TA Guide: How to Check Essays & Assignments for AI Use

This practical TA guide explains AI cheating, fair evidence review, and how GPTZero’s Writing Report supports process-based decisions.

Recently, a UNESCO global survey found that one in four respondents reported their universities had already encountered ethical issues linked to AI. A new element has been added to the teaching assistant role: you’re expected to know how to check whether an essay was written by AI and what to do if you find a student using it, all while institutions are still working out their positions on the issue.

New technology brings new questions. This TA guide to checking essays for AI is meant to be practical and immediately applicable. It draws on what we’ve learned from our growing community of educators and students, and covers what to look for and where tools like GPTZero can help.

What Do TAs Need to Watch Out For?

Teaching assistants are in a tricky position in the AI era: you’re often on the front lines without having authority over the rules. As one educator in our community said: “I am trying to find ways to get students to stop using AI for their written summaries in class and not doing any of the work themselves. Our university is not providing us with any tools to detect AI at the moment.”

While you see the most student work, you typically don’t set course policy on AI use, and you may not be the person who ultimately handles misconduct procedures.

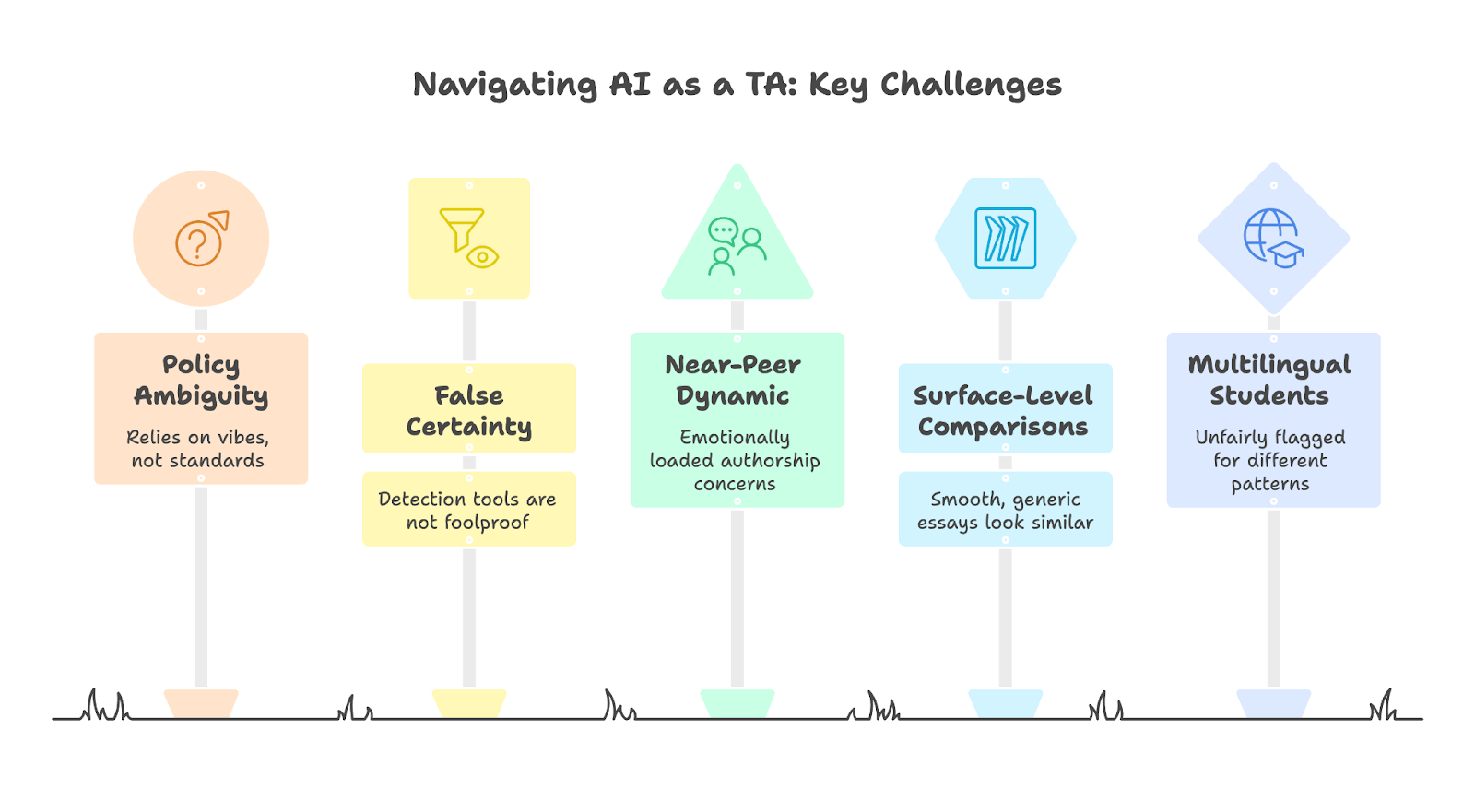

This is why the first thing to watch out for is treating policy ambiguity as a personal problem. If your department hasn’t defined what counts as acceptable AI support (brainstorming? grammar fixes?), your decisions can quickly become inconsistent. Before you make any high-stakes call, familiarize yourself with whatever guidance exists (even if it’s imperfect!) and document the reasoning you used.

You also need to watch out for false certainty. AI detectors can be useful, but they can also be wrong: a high score isn’t rock-solid proof, and a low score isn’t clearance. Treat any detection output as one data point among several, and always pair it with human review.

A third issue is the near-peer dynamic. Many TAs are simultaneously assessors and mentors, and are sometimes only a few years older than their students, which can make authorship concerns emotionally loaded. If you raise questions in a way that sounds accusatory, you risk damaging rapport; if you ignore concerns, you risk eroding fairness for students who did the work unaided.

Lastly, watch out for surface-level comparisons: AI-assisted writing can lead very different students to produce essays that look alike on the surface (smooth and generic). Meanwhile, multilingual students can be unfairly flagged if their writing patterns don’t match what you expect, which is why “style” alone is a weak basis for suspicion. Later on, we’ll cover a full checklist you can use when grading.

How to Check Student Essays & Assignments Effectively

Use the checklist below as a decision-support tool: it should help you slow down, ground your judgment in consistent, repeatable standards, and separate what needs feedback (evidence and alignment) from what might need follow-up (process, sources, inconsistencies).

Start by checking whether the student has actually answered the question that was asked. This grounds your review in academic standards rather than suspicion, and gives you a stable framework for checking essays even when writing styles vary.

Next, read for reasoning rather than polish. One of the biggest shifts in checking whether an essay was written by AI is learning to separate fluency from understanding: AI-assisted writing can sound confident and impressively “academic” yet still fail to answer the assignment question. Essays with a sophisticated intellectual backbone usually show deliberate choices (why this example, why this interpretation), while AI-shaped work often falls back on generalities.

With this checklist, you’ll see that crisp writing doesn’t automatically point to AI use, and awkward writing doesn’t mean a student hasn’t answered the question (especially if English isn’t their first language).

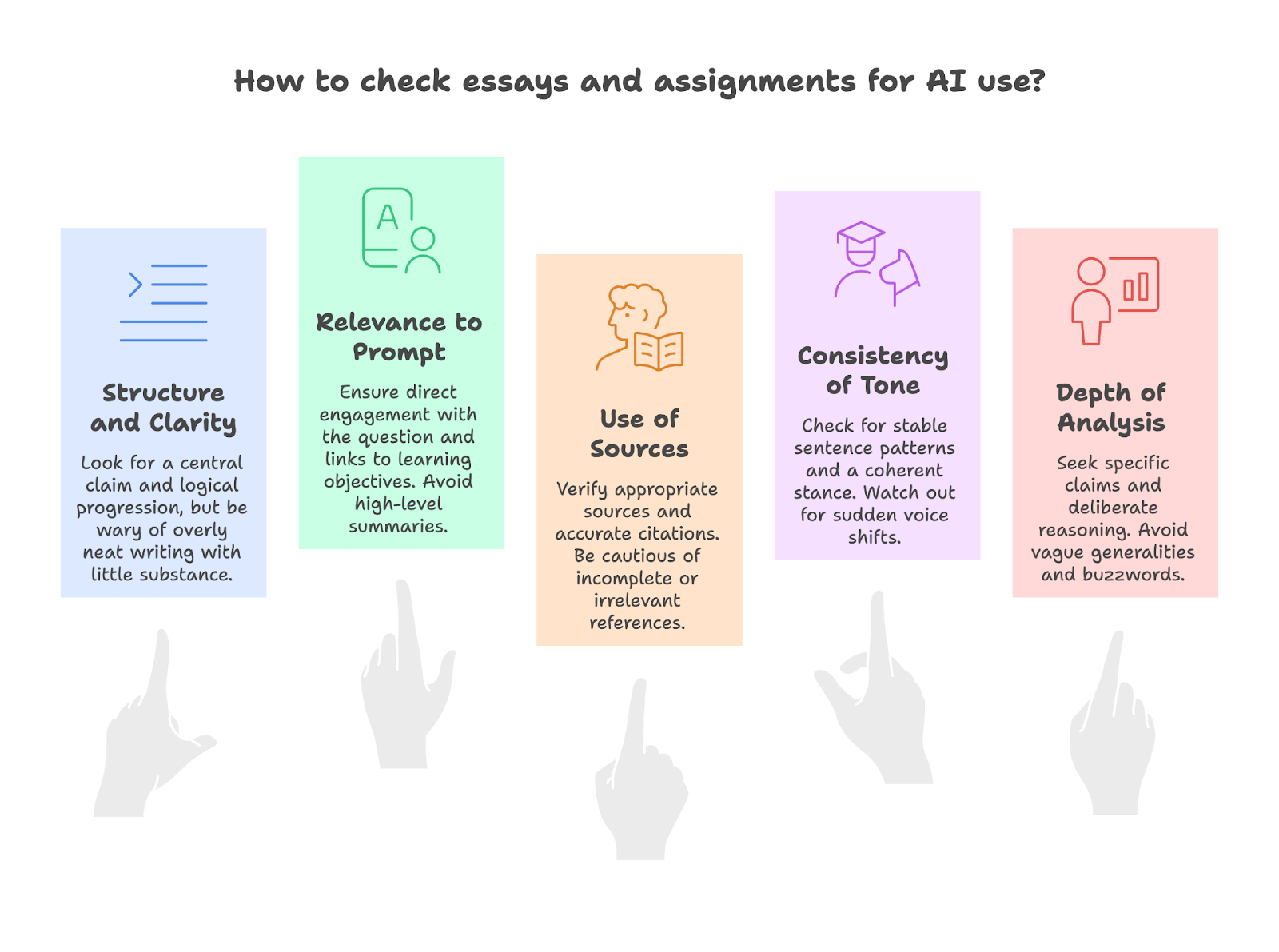

An Essay & Assignment Checking Checklist for TAs

1) Structure and clarity (Is the writing doing real work?)

Check whether the essay’s structure is helping the student make an argument: good organization only matters if it supports actual reasoning.

What to look for

- A central claim that the rest of the essay actually supports

- Logical progression between paragraphs (not just smooth transitions)

What might need follow-up

- Writing that is overly neat: perfect structure, but little substance underneath

- Paragraphs that “sound right” but don’t add anything new (repeating the same point with different wording)

2) Relevance to the prompt (Did they answer what was asked?)

Next, remember the main point of the assignment itself: does the student directly answer the question and make choices that fit the course goals?

What to look for

- Direct engagement with the actual question

- Explicit links to the learning objectives or course concepts

- Specific choices: why this approach, why this interpretation?

What might need follow-up

- A fluent, confident essay that never quite answers the prompt

- High-level summaries that avoid taking a position, or writing that feels like it’s filling space as opposed to actually making an argument

3) Use and quality of sources (Are references real and used well?)

Then look at the sources: do the citations exist, are they credible, and do they actually strengthen the argument?

What to look for

- Sources that are appropriate for the level and discipline

- Citations that match the claims being made (not “citation wallpaper”)

What might need follow-up

- Citations that are incomplete, inconsistent, or oddly formatted

- References that don’t seem to exist or don’t seem relevant

4) Consistency of tone and voice (Does it sound like one writer?)

After that, read for continuity: does the essay sound like it was written by one person with a stable voice and stance throughout?

What to look for

- Stable sentence patterns and phrasing across sections

- A coherent “stance” (how the student evaluates, qualifies, and explains)

What might need follow-up

- Sudden jumps in sophistication (the voice shifts noticeably mid-essay)

- Sections that feel like a different author: varying rhythm and vocabulary

- Overly vague academic phrasing (“It is important to note…”, “This essay will discuss…”) in a way that overwhelms the student’s own thinking

5) Depth of analysis vs surface-level responses (Is there thinking behind the text?)

Finally, separate polish from understanding by checking whether the student moves beyond summary into specific, supported analysis.

What to look for

- Specific, well-supported claims rather than broad statements (AI is good at producing shape but less reliable at producing substance)

- Deliberate shaping: why this example, why this conclusion

- Limits and nuance: the student can explain counterarguments or uncertainty

- Concrete engagement with course material, instead of general knowledge

What might need follow-up

- Vague general statements that sound “academic” but say very little

- Named concepts without explanation (buzzwords for the sake of it)

- Evidence listed without analysis (“quote, quote, quote” with no argument)

How to Check if an Essay May Have Used AI

This is where GPTZero comes in, with tools that help teachers promote responsible AI adoption in their classrooms. We’re consistently rated the most accurate and reliable AI detector across education, publishing, and enterprise use cases. Our AI Detector provides sentence-level analysis, while our Hallucination Detector automatically flags hallucinated sources and poorly supported claims in essays.

GPTZero’s writing replay tool, Writing Report, comes with GPTZero’s Google Chrome extension. It loads recordings quickly and plays them back smoothly, even as documents get longer (lag can be an annoying issue with other replay tools). The document’s editor(s) control access and can view and share the report via a link or as a PDF.

Our Writing Report includes a writing activity timeline, the largest copy-and-paste events, average revision duration, writing bursts, and whether the typing history looks broadly natural or unusual.

Last year, G2 ranked GPTZero the #1 most trusted and reliable AI tool, ahead of Grammarly. Independent reviewers, including Tom’s Guide, which tested all major AI detectors, found GPTZero to be the most accurate. GPTZero has also been confirmed as the most accurate commercial AI detector, outperforming competitors on the large-scale independent RAID benchmark.

GPTZero also stands apart as the only detector that explains in plain language why text appears AI-generated, with color-coded sentence-level highlights that show exactly where AI writing occurs. Importantly, we also collaborate with educational institutions through our 1,300-member Teacher Ambassador community to refine these tools in real-world settings.

(Want to contribute to AI literacy in your school? Help us teach your colleagues about Responsible AI usage through our Teacher Ambassador program. We will provide the educational resources, you do the teaching!)

What to Do as a TA If You Suspect AI Use

AI use sits on a spectrum: some students use tools lightly for spelling or grammar, while others rely on AI to outsource their thinking. That distinction matters, because a fair response depends on the extent of use and on what your course actually allows.

If you suspect AI use, look for patterns rather than any single “tell”. Warning signs often show up as inconsistencies: sudden shifts in tone or fluency, writing that feels polished but generic, overly formal phrasing that doesn’t match the student’s usual level, and work that summarizes correctly but avoids specific examples or nuanced insight.

Source problems, such as references that don’t exist or oddly formatted bibliographies, can be another strong clue. As one grader shares: “I usually catch AI use on the typical giveaways, like that the responses cite papers we didn't assign or don't exist.”

The most effective conversations begin with questions that probe understanding:

- Can the student explain how they developed a paragraph or argument?

- Can they walk you through the sources they used?

- Why did they choose this example or interpretation? (Students who actually did the work can usually describe their thought process, while students who outsourced it often struggle to do so, even if the writing sounds confident.)

GPTZero analyzes language patterns to estimate how likely a passage is to be machine-generated, but detectors aren’t flawless, and accuracy often depends on text length. False positives are more common in shorter submissions, formulaic prompts, heavily revised work, and multilingual writing. This is why the safest approach is triangulation: combining detector signals with your professional judgment.

When a student denies using AI, share what prompted your concern, invite them to explain their process, and treat ambiguity as a cue to clarify expectations around originality and transparency. Ultimately, consistent processes and empathetic questioning protect academic integrity and the learning relationship, especially while institutional policies continue to evolve.

Conclusion

The policies around AI use are still works in progress, but for now, the checklist above can help you find your way through this complicated new landscape. Use it to focus on what matters most: has the student answered the question, can you see critical thinking, and does the work hold together as something the student can explain? Remember that you don’t have to handle AI detection alone: use GPTZero’s AI Detector or Writing Report as tools to help lighten the load.

FAQ

- Can teaching assistants prove AI use in essays? Usually not from the text alone, because AI signals are imperfect. The fairest approach is to weigh all the evidence together and escalate only through your course’s formal procedure.

- How should a TA talk to a student about suspected AI use? Keep it calm and process-led: ask them to walk you through how they developed the argument, used sources, and drafted key sections, rather than leading with accusations.

- What counts as misconduct when AI is involved? It depends on your course policy, but it typically means using AI to replace the student’s own thinking or submitting AI-generated text without disclosure when disclosure is required.