Why Publishers and Editors Should Be Concerned about AI-Written Content

Why editors and publishers should beware of a new category of faux writers using AI, and the threats to quality control and reputation that AI-generated content creates.

More and more writers are using AI tools to elevate their work – it would be hard these days to find a writer who isn’t using Grammarly or a similar tool to refine their sentence structure. While AI can accelerate the writing process for human writers, it has also given rise to a troubling trend: machine-generated text is starting to threaten everything that makes human writing valuable.

It used to be a given that when you bought a book, someone (usually the author) had written it. Now, a new category of faux writers can use AI to self-publish fake biographies and travel guides, which are rampant on platforms like Amazon. This raises crucial questions for publishers and editors, who are the traditional gatekeepers of quality and authenticity.

Here, we look at the threats AI poses to quality control, how these threats can damage reputations, and why publishers and editors have a responsibility to learn about tools like GPTZero.

How AI-Generated Content Threatens Trust

The Washington Post recently highlighted the case of tech journalist Kara Swisher, who discovered dozens of AI-generated knockoff biographies of herself on Amazon. This isn't an isolated incident. Similar reports of AI-generated impostor books, misleading “companion” guides, and fake biographies are becoming increasingly common. Content like this misrepresents experts, misleads readers, and dilutes the credibility of genuine authors.

While platforms like Amazon have implemented some AI content detection measures, their enforcement against AI-generated content seems inconsistent and reactive, which leaves many authors frustrated and vulnerable. This has led authors and industry advocates, like the Authors Guild, to call for more stringent vetting processes and clear AI labeling (for example, there’s now a label to mark that a book has been written by a human).

This explosion of AI-generated content casts a dystopian shadow over established authors and the publishing industry as a whole. A writer may have spent years building their reputation, only to have AI-generated books appear under their name (or a slightly altered version of it), confusing readers and diluting the author’s brand. This can leave a long-lasting stain on an author's credibility and break the trust they've worked so hard to build.

A Quality Control Crisis

For writers, relying on AI-generated content can be a reputational minefield. While it can seem (by the very nature of its name) that generative AI creates, the reality is that it remixes. It pulls from existing data without true understanding or intent, often leading to homogenization and a loss of the nuance that makes human writing compelling. AI-generated content tends to be formulaic, lacking the depth, emotional resonance, and personal perspective that connect with readers.

This creeping homogenization presents an existential threat to the publishing industry. If every book, article, or blog post starts to sound the same, readers will have little incentive to engage. The unique voices and perspectives that drive readership and cultural discourse will be lost, replaced by a bland, AI-generated echo chamber.

This is an important inflection point: publishers and editors must recognize that literary culture depends on diversity of thought and authentic expression. If they fail to educate themselves on AI, it could start to determine more of the creative output, which would ultimately devalue human creativity and weaken the foundations of the publishing industry as a whole.

Copyright Issues

AI-generated content throws a spanner in the works of traditional copyright law, creating a complex and murky legal landscape. At the heart of the issue is the question of authorship: if AI generates a text, who owns the copyright? Is it the AI itself (a legal impossibility in most jurisdictions), the programmer who created the AI, or the user who provided the prompts?

Current legal frameworks, designed for human creators, struggle to accommodate the unique nature of AI-generated works. This ambiguity creates a gray area where ownership and infringement risks become difficult to define.

The way AI learns adds further complexity: it analyzes and then, as mentioned, remixes vast datasets. This process raises concerns about potential copyright infringement, even if unintentional. AI-generated content might inadvertently reproduce elements of copyrighted material, or the AI's training data itself might contain copyrighted works without proper licensing.

This means publishers need to be extremely cautious when using AI-generated content: they should do their due diligence to avoid legal pitfalls and be transparent with their audience about the use of AI in their publications.

The Publisher's Responsibility: Proactive Adaptation and Clear Guidelines

AI will disrupt publishing, but the key is to adapt proactively rather than simply react. Publishers and editors need to take a leadership role if human writing is to be preserved. This includes the need to:

Establish Clear Guidelines

Publishers need clear and enforceable policies regarding the use of AI in content creation, addressing issues like transparency, accuracy, bias, and copyright. Readers deserve to know when they are consuming AI-generated content; if they’re misled, their trust in publishers and the information they provide will be damaged.

Leverage AI Instead of Banning It

AI can be a useful tool for writers and editors, but it needs to be used to enhance (and not replace) human creativity. Instead of imposing blanket bans, publishers should explore AI’s potential to improve efficiency while keeping human originality at the core. They need to make sure that human writers remain at the forefront of creative storytelling and use AI to strengthen, not substitute for, their communication abilities.

Protect Quality Control

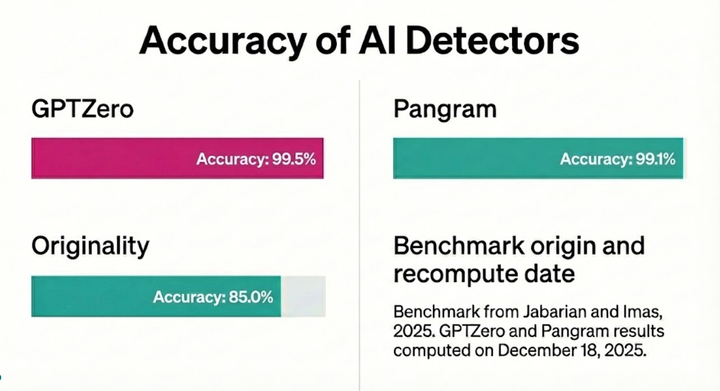

Editors need to be vigilant in reviewing AI-assisted work and recognize that preserving the author’s distinct human voice is an important part of the next chapter of publishing. Being able to tell AI-generated content from human writing, and to protect an author’s unique voice, will be a hugely valuable editorial skill. Publishers are the gatekeepers who choose which voices reach an audience, and their role is weightier than ever.

Human storytelling is an art form that remains the beating heart of the publishing industry. If publishers and editors don’t take responsibility for making sure it lives on, the future of literature risks becoming little more than mass algorithmic output.

If left unchecked, the proliferation of AI-generated content risks homogenizing creative output, diluting originality, and ultimately diminishing the value of human artistry. This is why publishers and editors need to care about AI-written content – because the future of human artistry depends on them doing so.