The Next Generation of Turing Tests

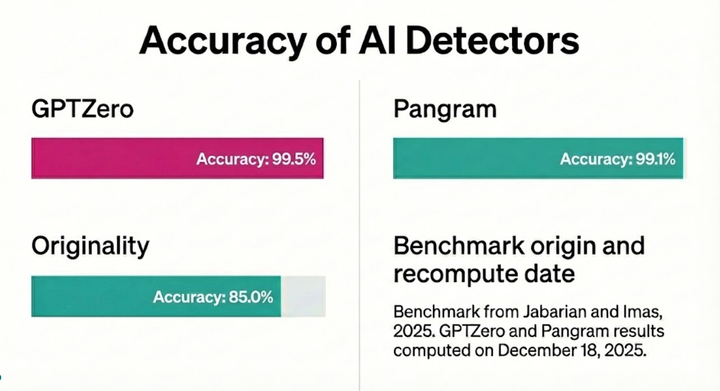

On Friday, September 15, the GPTZero research team ran a human study with more than fifty AI researchers from the University of Toronto Vector AI Institute and affiliate groups. The results were conclusive: even the researchers most familiar with AI language models were unable to reliably discern patterns of AI writing without GPTZero's assistance.

The study started with members of the GPTZero machine learning team attending the Vector Institute for AI career fair, alongside a variety of companies pitching why the next generation of AI talent should work for them. The atmosphere was electric around the GPTZero booth with engineers and researchers curious to hear more about what we do, and the methodologies we employ to achieve our goals as an organization working to preserve the value of human writing.

The most common questions that attendees asked were about the type and scale of data we have, as well as the machine learning architectures that leverage that data to distinguish human-written from AI-generated text. We have been scaling our models in size so that they can leverage an ever-growing corpus of text covering a variety of domains, including news, essays, social media, and encyclopedia articles. Our dataset size has increased by a factor of 22 since August, enabling the use of larger transformer models.

Upon reflection, these models, similar in some ways to large language models, have seen more text than a human can hope to process in a lifetime. A human, by comparison, finds it challenging to tell the difference between human and AI text. Contrast this with other segments of the AI tech landscape, such as autonomous vehicles or computer vision APIs. Vision problems and control problems are where humans excel. Although AI exceeds the performance of human vision on some benchmarks, it can still lag behind in robustness to some image corruptions, not to mention adversarial attacks. In those domains, it may be feasible to fake good performance by having, say, a group of humans act as a replacement for an ML API, or a human remotely control a car. There are notable examples of this happening in companies that claim to automate app building or offer chatbots. Unlike in these applications, having an army of human workers decide whether a document was written by a human or an AI is unlikely to be useful, as humans are notoriously ineffective at this task.

How bad are they?

We sought to answer this question at the career fair by hosting a research study. We had 5 different passages that were each approximately a paragraph in length, 2 of which were written by humans, and 3 of which were AI generated.

To incentivize participants to perform well, the prize for the highest score was a guaranteed interview for one of our Founding Machine Learning Engineer positions, a posting that has received over 700 applications. Figure 1 shows the distribution of scores. There were 57 respondents, only 3 of whom had a perfect score, and the majority of participants made 2 or more errors.
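For context, it helps to ask what pure guessing would look like on a quiz of this shape. The sketch below is a back-of-the-envelope baseline, not part of the study's analysis: it assumes each respondent labels each of the 5 passages independently with a 50% chance of being right, which is a simplifying assumption of ours. Only the passage count (5) and the respondent count (57) come from the study; the function and variable names are illustrative.

```python
from math import comb


def score_distribution(n_passages: int = 5, p_correct: float = 0.5) -> list[float]:
    """Return P(exactly k correct) for k = 0..n_passages under independent guessing."""
    return [
        comb(n_passages, k) * p_correct**k * (1 - p_correct) ** (n_passages - k)
        for k in range(n_passages + 1)
    ]


# Assumption: every answer is an independent coin flip (p_correct = 0.5).
dist = score_distribution()

n_respondents = 57                              # from the study
p_perfect = dist[-1]                            # all 5 correct
expected_perfect = n_respondents * p_perfect    # expected number of perfect scores
p_two_or_more_errors = sum(dist[:4])            # 0 to 3 correct, i.e. 2 to 5 errors

print(f"P(perfect score) by guessing:      {p_perfect:.4f}")
print(f"Expected perfect scores out of 57: {expected_perfect:.2f}")
print(f"P(2 or more errors) by guessing:   {p_two_or_more_errors:.4f}")
```

Under this coin-flip assumption, roughly two perfect scores would be expected from 57 respondents by luck alone, which puts the 3 observed perfect scores in perspective.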

The following passage, written by one of the members of our ML team, was the most difficult for participants:

There was a time when large language models ruled the world. Those days are long gone now. Instead, carefully-tuned, sparse fully-connected networks are more widely adopted. This of course has been to the detriment of many AI companies and researchers who have devoted their time to large models. This information can be used when deciding which research area to pursue. For example, there are many subfields that can be excluded based on the above criteria.

Figure 1: Distribution of Respondent Scores

Only 44% of participants correctly guessed that the text was human-generated. GPTZero makes the correct prediction on this text because our prediction thresholds have been tuned to limit false positive predictions. This is becoming increasingly important in domains such as education, where it is desirable to avoid unnecessary accusations of cheating. Our holistic approach, which includes the writing report and evaluates the writing process itself, further reduces the risk of false positives and is particularly helpful for ambiguous texts.
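To make the idea of tuning a threshold to limit false positives concrete, here is a minimal sketch of one common way to do it. This is a generic illustration, not GPTZero's actual procedure: it assumes a detector that outputs a score between 0 and 1 (higher meaning "more likely AI"), flags a text when its score is strictly above the threshold, and picks the lowest threshold that keeps the false positive rate on known human texts under a chosen cap. All scores, names, and the 10% cap below are hypothetical.

```python
def false_positive_rate(human_scores: list[float], threshold: float) -> float:
    """Fraction of human-written texts that would be flagged as AI (score > threshold)."""
    return sum(score > threshold for score in human_scores) / len(human_scores)


def threshold_for_max_fpr(human_scores: list[float], max_fpr: float) -> float:
    """Lowest threshold whose false positive rate on human texts is at most max_fpr."""
    # Candidate thresholds are the observed human scores, tried from lowest to highest.
    for candidate in sorted(set(human_scores)):
        if false_positive_rate(human_scores, candidate) <= max_fpr:
            return candidate
    # Unreachable for non-empty input: the highest human score always gives FPR = 0.
    return max(human_scores)


# Hypothetical detector scores on a held-out validation set (made up for illustration).
human_scores = [0.02, 0.05, 0.10, 0.15, 0.20, 0.30, 0.35, 0.40, 0.62, 0.91]
ai_scores = [0.55, 0.70, 0.88, 0.95, 0.99]

threshold = threshold_for_max_fpr(human_scores, max_fpr=0.10)
recall = sum(score > threshold for score in ai_scores) / len(ai_scores)

print(f"threshold = {threshold}")
print(f"false positive rate = {false_positive_rate(human_scores, threshold):.2f}")
print(f"recall on AI texts = {recall:.2f}")
```

The trade-off is visible even in this toy data: a stricter cap on false positives pushes the threshold up, which protects human writers at the cost of missing some AI-generated texts.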

Statistics for all 5 passages can be seen in Table 1. Overall, these results confirm the need for the next generation of Turing tests.

Table 1: Accuracy for each passage.

Originally, Alan Turing proposed the use of a human evaluator to conduct a blind study in which there is a human participant and a machine participant. The goal for the evaluator is to determine which is human and which is AI by asking a series of questions. This was a fascinating idea at the time, though it is becoming apparent that the natural language generation and reasoning abilities of LLMs are powerful enough to fool human evaluators.

One of the missing details in Turing’s proposal is who the human evaluator should be. What level of intelligence should they have? How old should they be? At GPTZero, we face a similar question, but instead of evaluating intelligence, we are searching for subtle signatures in writing that are peculiar to LLMs. Our goal is to do this in an objective, data-driven way that evolves with how English changes over time. The retraining procedures and data generation efforts of our ML team are a key piece of this shifting puzzle, and they enable us to stay one step ahead of LLMs as they play the imitation game.