Plagiarism vs. AI Plagiarism: What’s the Difference (and Why It Matters)

If you’re a student trying to maintain your academic integrity or an educator working out your policies, understanding this topic matters more than ever.

New tools are changing old rules, and there are still plenty of grey areas between traditional plagiarism and what many now call “AI plagiarism.” At GPTZero, we hear the same questions again and again: Is using ChatGPT cheating? Is AI plagiarism? What actually counts as misconduct?

Here, we look at plagiarism vs. AI plagiarism, the true meaning of AI plagiarism, and how institutions interpret ChatGPT plagiarism.

What Is Plagiarism?

Traditionally, plagiarism has meant using someone else’s work or ideas without giving proper credit, typically copying from a book, website, or another student. New types of plagiarism are becoming more common, but the core principle hasn’t changed: the work must reflect who created it. If it’s not your work, and you submit it as your own, that’s plagiarism.

Even though tools like ChatGPT generate text rather than copying from a single source, if an assignment is written by a tool and submitted as a student’s own work, without permission or disclosure, that is usually treated as academic misconduct.

The most obvious form of plagiarism is copying without attribution: taking text or concepts directly from a source without citation. Even short passages can be flagged if they aren’t properly credited. We like this definition from San José State University: “The act of representing the work of another as one’s own without giving appropriate credit, regardless of how that work was obtained, and submitting it to fulfill academic requirements.”

What Is AI Plagiarism?

A major issue with generative AI is that it can repeat content verbatim or near-verbatim, even when the output is meant to be original. In fact, a February report by Copyleaks, covered by Axios, found that nearly 46% of tested ChatGPT-3.5 outputs contained identical text, with many others showing lightly edited or poorly paraphrased material. While newer models seem to have reduced this behaviour, it still happens (and it’s one way creators discover their work has been used to train AI systems).

Another issue is attribution: many AI tools don’t cite sources at all, while others provide citations inconsistently (or outright incorrectly!). When AI-generated content includes facts without reliable attribution, students can end up submitting work that appears unsupported or misleading, which raises academic integrity red flags.

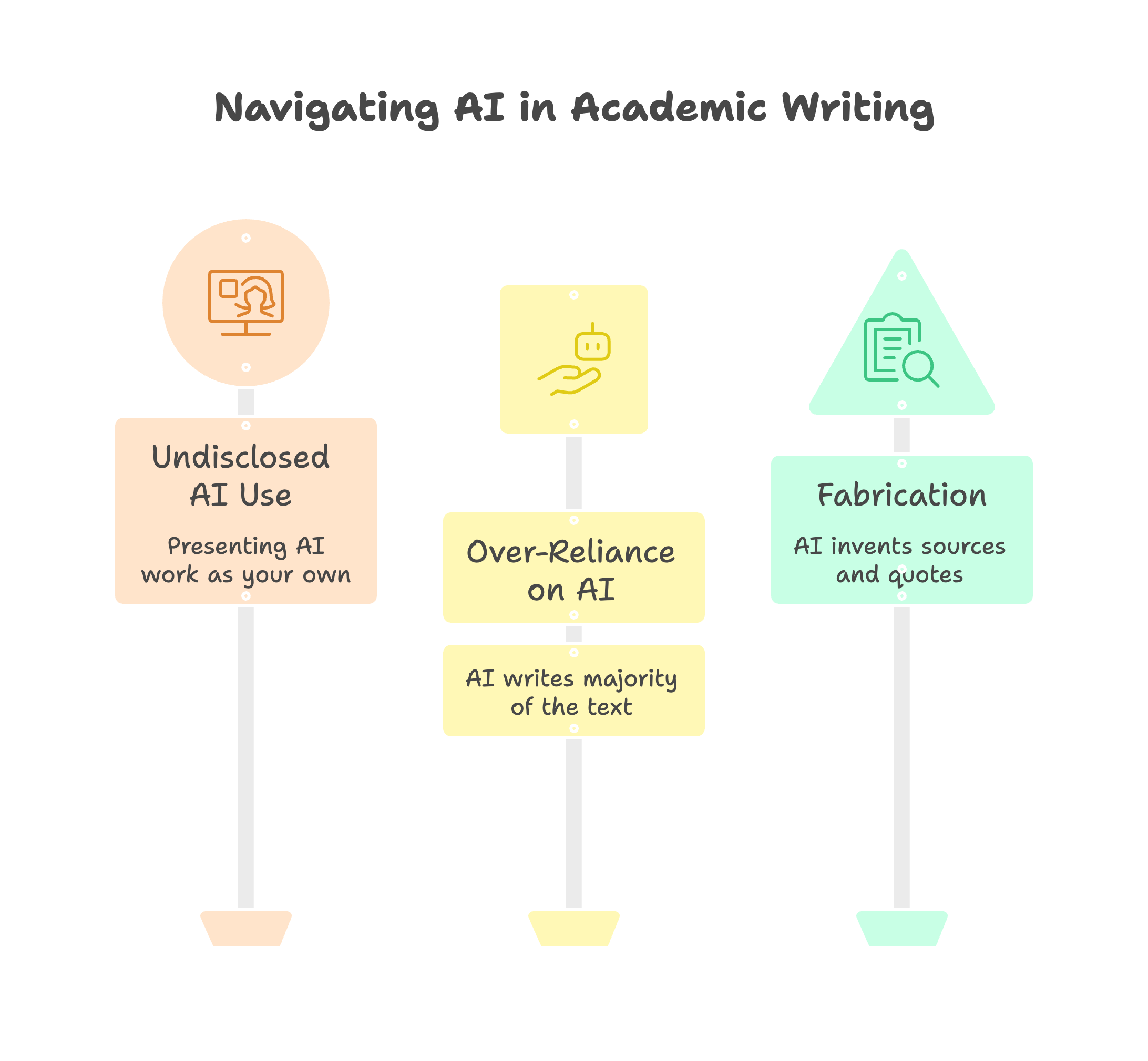

Using AI without disclosure

While every institution has its own AI policy, most define plagiarism as representing someone else’s work as your own without proper credit, no matter how that work was created. This means that submitting AI-generated writing without permission or disclosure generally counts as plagiarism.

As one department at the University of Cambridge explains: “[We] will not distinguish between the input of AI and the input of another human being in drawing the line between permissible help and plagiarism. A level of assistance that would be inappropriate if received from a person will also be inappropriate if received from AI.”

Broadly speaking, academic work is expected to reflect your own unique thinking and individual effort. Work created primarily by AI typically doesn’t meet that standard, and it doesn’t even matter which specific AI tool you use: if AI authorship isn’t allowed, using any generative system to produce your paper can be considered misconduct.

As the University of South Florida explains, “If you choose to use generative AI technology for writing, be sure you are transparent about your use of it with your teachers, publishers, and audience. The ethical use of generative AI depends on the context in which it is being used and the expectations of the individuals or organizations involved.”

Over-reliance on AI

At some institutions, using AI for brainstorming or editing is allowed. However, when AI writes the structure or the majority of the text, it becomes difficult for you to claim authorship, especially if that use isn’t disclosed.

This includes patchwriting, where AI output closely mimics source material and you stitch together lightly modified phrases. Even when it seems original on the surface, this kind of writing can violate plagiarism rules.

Fabrication

The extent to which AI systems can invent sources or quotes might surprise you, because the tools can be so incredibly confident when doing so. But submitting fabricated references or false information, even if it’s totally unintentional, is treated very seriously by most institutions and can lead to academic penalties.

Just recently, The Times reported that a book published by one of the world’s largest academic publishers contained dozens of fabricated citations, including references to journals that don’t exist. In some chapters, more than half of the cited sources couldn’t be verified. Experts reviewing the work concluded that the simplest explanation was AI-generated material producing hallucinated references (“hallucitations”): invented titles and journals strung together to seem like actual scholarship.

💡 Need to check your information? Use GPTZero’s Hallucination Detector to quickly find sources from text, essays, or research papers.

Plagiarism vs. AI Plagiarism: The Big Differences

Grey Areas Students Get Wrong

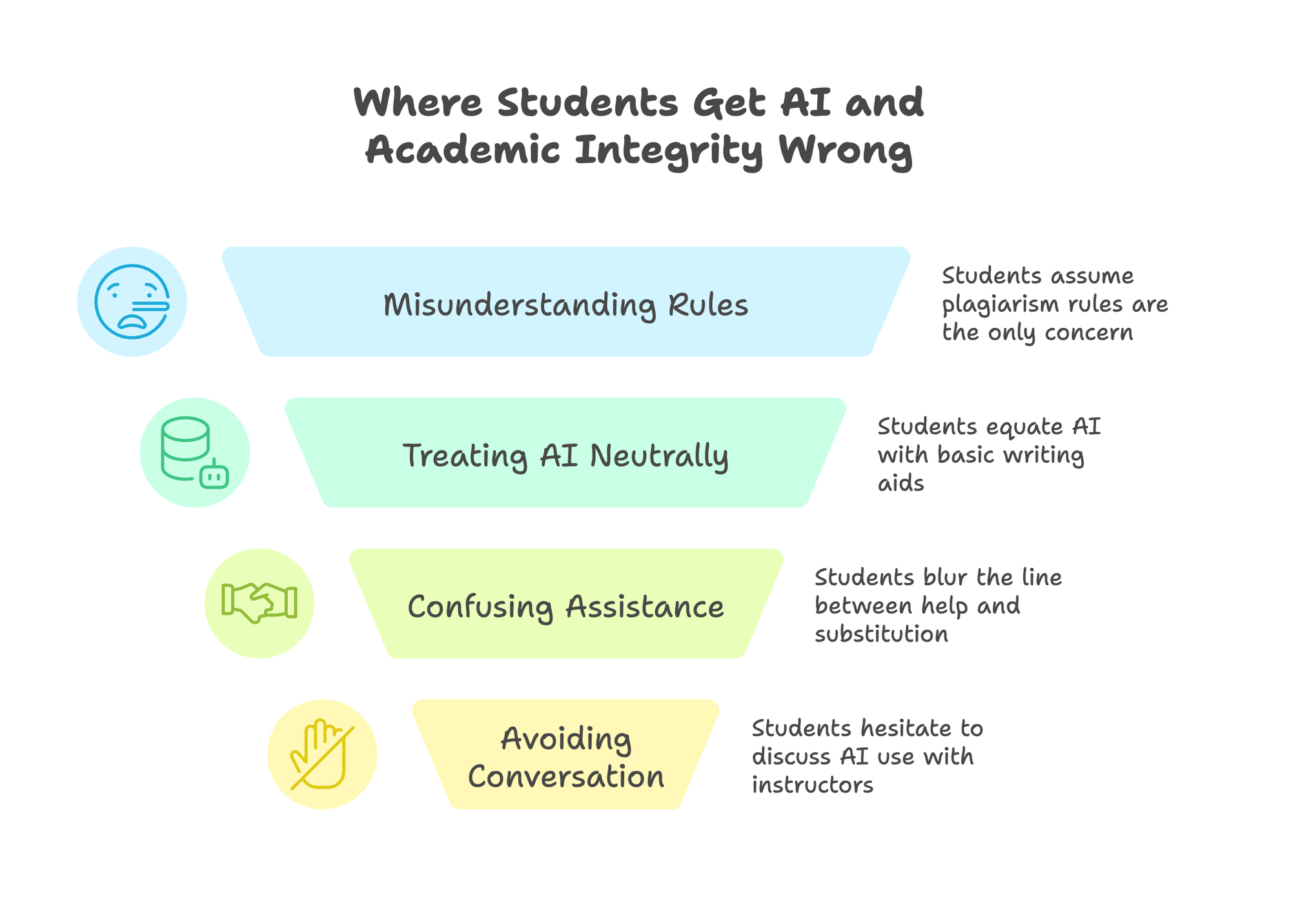

When it comes to AI and academic integrity, the fundamental thing to remember is the line between tools that support the intellectual heavy lifting and tools that replace it. One of the best takes we’ve seen on this topic is by Joshua Gross, Associate Professor of Computer Science at CSU Monterey Bay, who shared the following mistakes he sees.

“If it’s not plagiarism, it must be allowed”

One of the most common errors students make is assuming that plagiarism rules are the only rules that matter: if nothing was copied from a book or website, it can feel like no harm was done, right? But as Gross puts it: “It’s not plagiarism. It may be an academic integrity violation.”

In many courses, the issue is whether the submitted work reflects the student’s own thinking, and AI use can violate that expectation even when the text appears original.

Treating AI like a neutral writing assistant

Students often compare AI to tools they’ve always been allowed to use, like spellcheckers or grammar tools. In some cases that comparison holds (AI can suggest improvements or help students think through problems), but the similarity ends when AI starts building the work itself.

Gross notes that AI can “provide the same kind of guidance that a writing tutor could offer,” but only within set limits: once AI begins restructuring arguments or supplying ideas the student didn’t arrive at independently, the issue of authorship becomes a lot murkier. This is where many students overstep without realizing it.

Confusing assistance with substitution

Another grey area is how much help is too much: brainstorming a topic is different from drafting an essay, and editing a sentence is different from rewriting a paragraph. Gross makes this distinction explicit in his own teaching: “I tell my students they are allowed to use ChatGPT to help them debug their code and solve problems… I do not allow them to have ChatGPT write code for them.”

The same principle applies across disciplines: AI can help with some of the preparation for the work, but it shouldn’t create or replace the actual work the assignment will assess.

Avoiding the conversation altogether

Even when students want to do the right thing, asking about AI can feel risky, because some instructors are comfortable discussing AI while others are not. Gross points out that if the question is framed poorly, it may sound like: “Am I allowed to have a computer program write my essay.”

Instead, his advice is to approach the topic early and informally, often during office hours, and to come across as genuinely curious rather than seeking permission. The simplest guidance he offers is also the most useful: “If it’s something you wouldn’t discuss with your professor, then it’s certainly not a good idea.”

Is Using AI Always Cheating?

Let’s remember that most schools are less concerned about the tool itself than they are about authorship and learning. If an assignment is meant to evaluate your ability to think critically or develop an argument, then submitting purely AI-generated text as your own work certainly crosses the line, because AI hasn’t supported your learning: it’s replaced it.

Then again, AI use exists on a spectrum, and many instructors are happy to allow students to use tools like ChatGPT for things like grammar checks, similar to how students have long used spellcheckers. In that case, AI functions as support, instead of a substitute for original thinking.

However, the devil is in the details, and if AI writes large portions of your essay or produces content you don’t fully understand, it becomes difficult to claim authorship, even if the ideas feel “yours.” This is where accusations of AI cheating tend to happen.

How Schools Detect Plagiarism vs. AI-Written Work

Plagiarism detection is about identifying textual overlap. Most schools use a plagiarism checker like GPTZero that compares student submissions against large databases of published writing and prior submissions. When there is overlap, these tools create a report highlighting matched passages and possible sources, and instructors then review it with context in mind.

A high similarity score doesn’t automatically mean plagiarism. Quotations, references, common phrases, and properly cited material can all raise similarity percentages without indicating misconduct, and this is why plagiarism reports are meant to be reviewed by instructors, not treated as automatic verdicts on their own.

The reports are meant to be paired with the human judgment of instructors, who can spot sudden shifts in writing style, vocabulary that departs from a student’s previous work, or arguments that appear disconnected from class discussions. In many cases, plagiarism concerns emerge from patterns rather than a single red flag.
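
To make the idea of textual overlap concrete, here is a minimal Python sketch. It is not how GPTZero or any other specific checker works: the function names, the three-word window, and the sample texts are all illustrative assumptions. It simply measures what fraction of a submission’s short word sequences also appear in a source, and shows why a properly quoted and cited passage can still produce a high score.

```python
import re


def word_ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of lowercase word n-grams found in the text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def similarity_score(submission: str, source: str, n: int = 3) -> float:
    """Fraction of the submission's n-grams that also appear in the source."""
    submission_grams = word_ngrams(submission, n)
    if not submission_grams:
        return 0.0
    shared = submission_grams & word_ngrams(source, n)
    return len(shared) / len(submission_grams)


# Hypothetical texts, invented purely for illustration.
source = ("the work must reflect who created it and credit "
          "must be given to every source used")
quoted = ('As the handbook says, "the work must reflect who created it '
          'and credit must be given" (Handbook, p. 3).')
original = "My essay argues that disclosure rules matter more than detection tools."

# A correctly quoted and cited sentence still overlaps heavily with the source.
print(f"quoted and cited: {similarity_score(quoted, source):.0%}")
# Writing with no shared three-word sequences does not overlap at all.
print(f"original writing: {similarity_score(original, source):.0%}")
```

In this toy example the quoted sentence scores far higher than the original one even though it is properly credited, which is exactly why a similarity percentage needs an instructor’s review before anyone draws a conclusion from it.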

How GPTZero Can Help

Unlike plagiarism checkers that look for copied source material, GPTZero’s AI detection focuses on how a piece of writing was produced. Its detection models analyze linguistic patterns that are more common in AI-generated text, helping educators assess whether AI may have played a significant role in the writing process.

One of GPTZero’s most important features is the Writing Report. Instead of judging a final submission in isolation, the Writing Report shows how a document was written over time: when text was typed, edited, pasted, or revised. Educators use it to see how a document came to be, while for students it can be powerful evidence of authorship, because drafting organically and developing ideas incrementally both leave a visible trail.

For educators, GPTZero works best as part of a layered approach. No single tool can determine intent or integrity on its own. As education leader Anna Mills has put it, educators can integrate AI detection as part of an approach like “Swiss cheese”, a term often used in safety science for stacking several imperfect layers of protection so that the gaps in one are covered by the others.

💡 Use GPTZero’s Writing Report to document your authorship and avoid misunderstandings.

Conclusion

It’s easy to get confused about plagiarism versus AI plagiarism, but the distinction is primarily about whether the submitted work reflects a student’s own thinking. Traditional plagiarism asks, Where did this come from? AI-related misconduct asks, Who actually did the work?

This is why disclosure matters so much: using AI to support the research stage may be acceptable in some settings, while using it to structure an assignment without permission often crosses the line. The safest approach is to talk openly with instructors when expectations aren’t straightforward. While the tools might continue to change, the underlying principle of academic honesty and integrity remains the same.

FAQ

- Is AI plagiarism the same as plagiarism? Not exactly, although they’re closely related. While traditional plagiarism focuses on copying or reusing existing material without credit, AI plagiarism is usually about authorship, as in, submitting work written by a tool as if you wrote it yourself. These days, many institutions treat undisclosed AI-written work similarly to plagiarism because it misrepresents who did the work.

- How should students cite AI tools? This depends on your institution’s policy: some schools require students to explicitly disclose AI use (for example, noting that ChatGPT was used for brainstorming or editing), and others ban AI use altogether. When citation is allowed, the key is to state how the tool was used and make sure the final content is your own.

- How do teachers check for AI plagiarism? Educators often use a combination of AI detection tools, a plagiarism checker, and their own judgment based on a student’s previous work. AI detectors are meant to offer context, as opposed to definitive conclusions, which is why conversations and evidence of process often matter just as much as the final score.