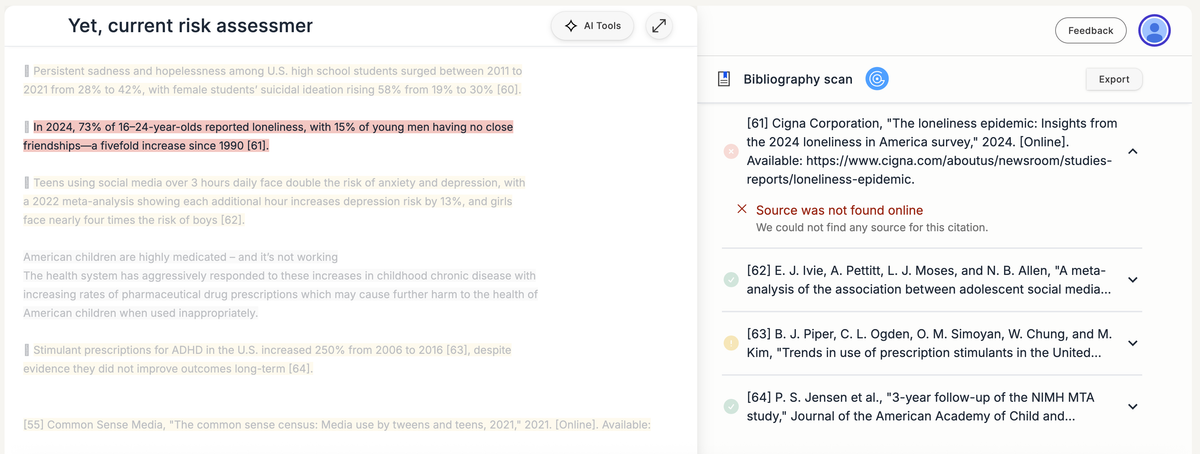

| Title | Average Review Rating | Paper Link | Citation Check Scan Link | Example of Verified Hallucination | Comment |

| TamperTok: Forensics-Driven Tokenized Autoregressive Framework for Image Tampering Localization | 8.0 | TamperTok: Forensics-Driven Tokenized Autoregressive Framework for Image Tampering Localization | OpenReview | https://app.gptzero.me/documents/4645494f-70eb-40bb-aea7-0007e13f7179/share | Chong Zou, Zhipeng Wang, Ziyu Li, Nan Wu, Yuling Cai, Shan Shi, Jiawei Wei, Xia Sun, Jian Wang, and Yizhou Wang. Segment everything everywhere all at once. In Advances in Neural Information Processing Systems (NeurIPS), volume 36, 2023. | This paper exists, but all authors are wrong. |

| MixtureVitae: Open Web-Scale Pretraining Dataset With High Quality Instruction and Reasoning Data Built from Permissive Text Sources | 8.0 | MixtureVitae: Open Web-Scale Pretraining Dataset With High Quality Instruction and Reasoning Data Built from Permissive Text Sources | OpenReview | https://app.gptzero.me/documents/bfd10666-ea2d-454c-9ab2-75faa8b84281/share | Dan Hendrycks, Collin Burns, Steven Basart, Andy Critch, Jerry Li, Dawn Ippolito, Aina Lapedriza, Florian Tramer, Rylan Macfarlane, Eric Jiang, et al. Measuring massive multitask language understanding. In Proceedings of the International Conference on Learning Representations (ICLR), 2021. | The paper and first 3 authors match. The last 7 authors are not on the paper, and some of them do not exist |

| Catch-Only-One: Non-Transferable Examples for Model-Specific Authorization | 6.0 | Catch-Only-One: Non-Transferable Examples for Model-Specific Authorization | OpenReview | https://app.gptzero.me/documents/9afb1d51-c5c8-48f2-9b75-250d95062521/share | Dinghuai Zhang, Yang Song, Inderjit Dhillon, and Eric Xing. Defense against adversarial attacks using spectral regularization. In International Conference on Learning Representations (ICLR), 2020. | No Match |

| OrtSAE: Orthogonal Sparse Autoencoders Uncover Atomic Features | 6.0 | OrtSAE: Orthogonal Sparse Autoencoders Uncover Atomic Features | OpenReview | https://app.gptzero.me/documents/e3f155d7-067a-4720-adf8-65dc9dc714b9/share | Robert Huben, Logan Riggs, Aidan Ewart, Hoagy Cunningham, and Lee Sharkey. Sparse autoencoders can interpret randomly initialized transformers, 2025. URL https://arxiv.org/abs/2501.17727. | This paper exists, but all authors are wrong. |

| Principled Policy Optimization for LLMs via Self-Normalized Importance Sampling | 5.0 | Principled Policy Optimization for LLMs via Self-Normalized Importance Sampling | OpenReview | https://app.gptzero.me/documents/54c8aa45-c97d-48fc-b9d0-d491d54df8d3/share | David Rein, Stas Gaskin, Lajanugen Logeswaran, Adva Wolf, Oded teht sun, Jackson H. He, Divyansh Kaushik, Chitta Baral, Yair Carmon, Vered Shwartz, Sang-Woo Lee, Yoav Goldberg, C. J. H. un, Swaroop Mishra, and Daniel Khashabi. Gpqa: A graduate-level google-proof q\&a benchmark, 2023 | All authors except the first are fabricated. |

| PDMBench: A Standardized Platform for Predictive Maintenance Research | 4.5 | PDMBench: A Standardized Platform for Predictive Maintenance Research | OpenReview | https://app.gptzero.me/documents/5c55afe7-1689-480d-ac44-9502dc0f9229/share | Andrew Chen, Andy Chow, Aaron Davidson, Arjun DCunha, Ali Ghodsi, Sue Ann Hong, Andy Konwinski, Clemens Mewald, Siddharth Murching, Tomas Nykodym, et al. Mlflow: A platform for managing the machine learning lifecycle. In Proceedings of the Fourth International Workshop on Data Management for End-to-End Machine Learning, pp. 1-4. ACM, 2018. | Authors and conference match this paper, but title is somewhat different and the year is wrong. |

| IMPQ: Interaction-Aware Layerwise Mixed Precision Quantization for LLMs | 4.5 | IMPQ: Interaction-Aware Layerwise Mixed Precision Quantization for LLMs | OpenReview | https://app.gptzero.me/documents/5461eefd-891e-4100-ba1c-e5419af520c0/share | Chen Zhu et al. A survey on efficient deployment of large language models. arXiv preprint arXiv:2307.03744, 2023. | The arXiv ID is real, but the paper has different authors and a different title. |

| C3-OWD: A Curriculum Cross-modal Contrastive Learning Framework for Open-World Detection | 4.5 | C3-OWD: A Curriculum Cross-modal Contrastive Learning Framework for Open-World Detection | OpenReview | https://app.gptzero.me/documents/c07521cd-2757-40a2-8dc1-41382d7eb11b/share | K. Marino, R. Salakhutdinov, and A. Gupta. Fine-grained image classification with learnable semantic parts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 4500-4509, 2019. | Authors and subject match this paper |

| TopoMHC: Sequence–Topology Fusion for MHC Binding | 4.5 | TopoMHC: Sequence–Topology Fusion for MHC Binding | OpenReview | https://app.gptzero.me/documents/8da4f86c-00d8-4d73-81dd-c168c0bfdf4e/share | Yuchen Han, Yohan Kim, Dalibor Petrovic, Alessandro Sette, Morten Nielsen, and Bjoern Peters. Deepligand: a deep learning framework for peptide-mhc binding prediction. Bioinformatics, 39 (1):btac834, 2023. doi: 10.1093/bioinformatics/btac834. | No Match |

| Can Text-to-Video Models Generate Realistic Human Motion? | 4.5 | Can Text-to-Video Models Generate Realistic Human Motion? | OpenReview | https://app.gptzero.me/documents/f52aad2d-2253-44bf-80ba-8e8668df650f/share | Yugandhar Balaji, Jianwei Yang, Zhen Xu, Menglei Chai, Zhoutong Xu, Ersin Yumer, Greg Shakhnarovich, and Deva Ramanan. Conditional gan with discriminative filter generation for text-to-video synthesis. In Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI), pp. 2155-2161, July 2019. doi: 10.24963/ijcai.2019/276. | This paper exists, but the authors and page numbers are wrong. |

| GRF-LLM: Environment-Aware Wireless Channel Modeling via LLM-Guided 3D Gaussians | 4.0 | GRF-LLM: Environment-Aware Wireless Channel Modeling via LLM-Guided 3D Gaussians | OpenReview | https://app.gptzero.me/documents/c3e66b9c-20b4-4c50-b881-e40aba2a514f/share | Junting Chen, Yong Zeng, and Rui Zhang. Rfcanvas: A radio frequency canvas for wireless network design. In IEEE International Conference on Communications, pp. 1-6, 2024. | Title partially matches this article. |

| Listwise Generalized Preference Optimization with Process-aware Signals for LLM Reasoning | 4.0 | Listwise Generalized Preference Optimization with Process-aware Signals for LLM Reasoning | OpenReview | https://app.gptzero.me/documents/bbeecf1c-189a-4311-999b-617aab686ea9/share | Kaixuan Zhou, Jiaqi Liu, Yiding Wang, and James Zou. Generalized direct preference optimization. arXiv preprint arXiv:2402.05015, 2024. | No Match |

| IUT-Plug: A Plug-in tool for Interleaved Image-Text Generation | 4.0 | IUT-Plug: A Plug-in tool for Interleaved Image-Text Generation | OpenReview | https://app.gptzero.me/documents/0f12d2fc-403b-4859-8d00-f75fd9f56e39/share | Yash Goyal, Anamay Mohapatra, Nihar Kwatra, and Pawan Goyal. A benchmark for compositional text-to-image synthesis. In Thirty-fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 1), 2021. | This paper exists, but the authors are all wrong. |

| Resolving the Security-Auditability Dilemma with Auditable Latent Chain-of-Thought | 4.0 | Resolving the Security-Auditability Dilemma with Auditable Latent Chain-of-Thought | OpenReview | https://app.gptzero.me/documents/5cee5c3a-5e75-4063-a054-1e934a071705/share | Yixiang Ma, Ziyi Liu, Zhaoyu Wang, Zhaofeng Xu, Yitao Wang, and Yang Liu. Safechain: A framework for securely executing complex commands using large language models. arXiv preprint arXiv:2402.16521, 2024a. | No match; although this paper is closely related. |

| ThinkGeo: Evaluating Tool-Augmented Agents for Remote Sensing Tasks | 4.0 | ThinkGeo: Evaluating Tool-Augmented Agents for Remote Sensing Tasks | OpenReview | https://app.gptzero.me/documents/f3441445-5401-48e9-9617-09a635992ff9/share | Yunzhu Yang, Shuang Li, and Jiajun Wu. MM-ReAct: Prompting chatgpt to multi-modal chain-of-thought reasoning. arXiv preprint arXiv:2401.04740, 2024. | No Match |

| Taming the Judge: Deconflicting AI Feedback for Stable Reinforcement Learning | 3.5 | Taming the Judge: Deconflicting AI Feedback for Stable Reinforcement Learning | OpenReview | https://app.gptzero.me/documents/80c64df2-eee6-41aa-90cc-3f835b128747/share | Chenglong Wang, Yang Liu, Zhihong Xu, Ruochen Zhang, Jiahao Wu, Tao Luo, Jingang Li, Xunliang Liu, Weiran Qi, Yujiu Yang, et al. Gram-r ${ }^{8}$ : Self-training generative foundation reward models for reward reasoning. arXiv preprint arXiv:2509.02492, 2025b. | All authors except the first are fabricated and the title is altered. |

| DANCE-ST: Why Trustworthy AI Needs Constraint Guidance, Not Constraint Penalties | 3.5 | DANCE-ST: Why Trustworthy AI Needs Constraint Guidance, Not Constraint Penalties | OpenReview | https://app.gptzero.me/documents/3ebd71b4-560d-4fa3-a0d3-ed2fa13c519f/share | Sardar Asif, Saad Ghayas, Waqar Ahmad, and Faisal Aadil. Atcn: an attention-based temporal convolutional network for remaining useful life prediction. The Journal of Supercomputing, 78(1):1-19, 2022. | Two papers with similar titles exist here and here, but the authors, journal, and date do not match. |

| Federated Hierarchical Anti-Forgetting Framework for Class-Incremental Learning with Large Pre-Trained Models | 3.33 | Federated Hierarchical Anti-Forgetting Framework for Class-Incremental Learning with Large Pre-Trained Models | OpenReview | https://app.gptzero.me/documents/ae10437b-c65b-455b-ad22-918742a5ed82/share | Arslan Chaudhry, Arun Mallya, and Abhinav Srivastava. Fedclassil: A benchmark for class-incremental federated learning. In NeurIPS, 2023. | No Match |

| Chain-of-Influence: Tracing Interdependencies Across Time and Features in Clinical Predictive Modeling | 3.33 | Chain-of-Influence: Tracing Interdependencies Across Time and Features in Clinical Predictive Modeling | OpenReview | https://app.gptzero.me/documents/dff2c063-6986-4241-8c20-4327a39d4d4b/share | Ishita et al. Bardhan. Icu length-of-stay prediction with interaction-based explanations. Journal of Biomedical Informatics, 144:104490, 2024. | No Match |

| TRACEALIGN - Tracing the Drift: Attributing Alignment Failures to Training-Time Belief Sources in LLMs | 3.33 | TRACEALIGN - Tracing the Drift: Attributing Alignment Failures to Training-Time Belief Sources in LLMs | OpenReview | https://app.gptzero.me/documents/4b379aba-8d8a-427b-ac67-d13af5eda8c9/share | Lisa Feldman Barrett. Emotions are constructed: How brains make meaning. Current Directions in Psychological Science, 25(6):403-408, 2016. | This article is similar, but the title and metadata are different. |

| MEMORIA: A Large Language Model, Instruction Data and Evaluation Benchmark for Intangible Cultural Heritage | 3.33 | MEMORIA: A Large Language Model, Instruction Data and Evaluation Benchmark for Intangible Cultural Heritage | OpenReview | https://app.gptzero.me/documents/956129a3-11ee-4503-92e3-3ed5db12d2d6/share | Yang Cao, Rosa Martinez, and Sarah Thompson. Preserving indigenous languages through neural language models: Challenges and opportunities. Computational Linguistics, 49(3):567-592, 2023. | No Match |

| Reflexion: Language Models that Think Twice for Internalized Self-Correction | 3.2 | Reflexion: Language Models that Think Twice for Internalized Self-Correction | OpenReview | https://app.gptzero.me/documents/45f2f68d-df09-4bbf-8513-588fe24f26fa/share | Guang-He Xiao, Haolin Wang, and Yong-Feng Zhang. Rethinking uncertainty in llms: A case study on a fact-checking benchmark. arXiv preprint arXiv:2305.11382, 2023. | No Match |

| ECAM: Enhancing Causal Reasoning in Foundation Models with Endogenous Causal Attention Mechanism | 3.0 | ECAM: Enhancing Causal Reasoning in Foundation Models with Endogenous Causal Attention Mechanism | OpenReview | https://app.gptzero.me/documents/d99a5552-38e0-459b-8746-4e64069b0640/share | Atticus Geiger, Zhengxuan Wu, Yonatan Rozner, Mirac Suzgun Naveh, Anna Nagarajan, Jure Leskovec, Christopher Potts, and Noah D Goodman. Causal interpretation of self-attention in pre-trained transformers. In Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 2023. URL https://proceedings.neurips.cc/paper_files/paper/2023/file/642a321fba8a0f03765318e629cb93ea-Paper-Conference.pdf. | A paper with this title exists at the given URL, but the authors don't match. |

| MANTA: Cross-Modal Semantic Alignment and Information-Theoretic Optimization for Long-form Multimodal Understanding | 3.0 | MANTA: Cross-Modal Semantic Alignment and Information-Theoretic Optimization for Long-form Multimodal Understanding | OpenReview | https://app.gptzero.me/documents/381ed9a6-b168-4cd0-81ad-1f50139c0737/share | Guy Dove. Language as a cognitive tool to imagine goals in curiosity-driven exploration. Nature Communications, 13(1):1-14, 2022. | An article with this title exists, but author and publication don't match. |

| LOSI: Improving Multi-agent Reinforcement Learning via Latent Opponent Strategy Identification | 3.0 | LOSI: Improving Multi-agent Reinforcement Learning via Latent Opponent Strategy Identification | OpenReview | https://app.gptzero.me/documents/53e86e4b-a7e2-48d0-976b-240bfc412836/share | Jing Liang, Fan Zhou, Shuying Li, Jun Chen, Guandong Zhou, Huaiming Xu, and Xin Li. Learning opponent behavior for robust cooperation in multi-agent reinforcement learning. IEEE Transactions on Cybernetics, 53(12):7527-7540, 2023. | No Match |

| The Dynamic Interaction Field Transformer: A Universal, Tokenizer-Free Language Architecture | 3.0 | The Dynamic Interaction Field Transformer: A Universal, Tokenizer-Free Language Architecture | OpenReview | https://app.gptzero.me/documents/80fd90a6-c99e-4c31-af72-0da9e90949f6/share | Kaj Bostrom and Greg Durrett. Byte-level representation learning for multi-lingual named entity recognition. Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pp. 4617-4627, 2020. | No Match |

| Strategema: Probabilistic Analysis of Adversarial Multi-Agent Behavior with LLMs in Social Deduction Games | 3.0 | Strategema: Probabilistic Analysis of Adversarial Multi-Agent Behavior with LLMs in Social Deduction Games | OpenReview | https://app.gptzero.me/documents/1155e8a8-f679-4942-8fd9-c47fb64ad967/share | Tom Eccles, Jeffrey Tweedale, and Yvette Izza. Let's pretend: A study of negotiation with autonomous agents. In 2009 IEEE/WIC/ACM International Joint Conference on Web Intelligence and Intelligent Agent Technology (WI-IAT), volume 3, pp. 449-452. IEEE, 2009. | No Match |

| Understanding Transformer Architecture through Continuous Dynamics: A Partial Differential Equation Perspective | 3.0 | Understanding Transformer Architecture through Continuous Dynamics: A Partial Differential Equation Perspective | OpenReview | https://app.gptzero.me/documents/460a1a23-1a97-482a-9759-ade855a4a0b4/share | Zijie J Wang, Yuhao Choi, and Dongyeop Wei. On the identity of the representation learned by pre-trained language models. arXiv preprint arXiv:2109.01819, 2021. | No Match |

| Diffusion Aligned Embeddings | 2.8 | Diffusion Aligned Embeddings | OpenReview | https://app.gptzero.me/documents/3d95a003-06c6-4233-881b-03b1e29b4ba2/share | Yujia Wang, Hu Huang, Cynthia Rudin, and Yaron Shaposhnik. Pacmap: Dimension reduction using pairwise controlled manifold approximation projection. Machine Learning, 110:559-590, 2021. | A similar paper with two matching authors exists, but the other authors, title, and journal are wrong. |

| Leveraging NLLB for Low-Resource Bidirectional Amharic – Afan Oromo Machine Translation | 2.5 | Leveraging NLLB for Low-Resource Bidirectional Amharic – Afan Oromo Machine Translation | OpenReview | https://app.gptzero.me/documents/813da6e2-f7e8-4c95-bdd8-7d29b8e4b641/share | Atnafa L. Tonja, Gebremedhin Gebremeskel, and Seid M. Yimam. Evaluating machine translation systems for ethiopian languages: A case study of amharic and afan oromo. Journal of Natural Language Engineering, 29(3):456-478, 2023. | No Match |

| Certified Robustness Training: Closed-Form Certificates via CROWN | 2.5 | Certified Robustness Training: Closed-Form Certificates via CROWN | OpenReview | https://app.gptzero.me/documents/53b60ef5-2ebf-403e-8123-3a9bb2da0f33/share | Huan Zhang, Hongge Chen, Chaowei Xiao, and Bo Zhang. Towards deeper and better certified defenses against adversarial attacks. In International Conference on Learning Representations, 2019. URL https://openreview.net/forum?id=rJgG92A2m | No Match |

| Context-Aware Input Switching in Mobile Devices: A Multi-Language, Emoji-Integrated Typing System | 2.5 | Context-Aware Input Switching in Mobile Devices: A Multi-Language, Emoji-Integrated Typing System | OpenReview | https://app.gptzero.me/documents/68998766-49c3-4269-9eca-3b6a76ed68b4/share | Ishan Tarunesh, Syama Sundar Picked, Sai Krishna Bhat, and Monojit Choudhury. Machine translation for code-switching: A systematic literature review. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics, pp. 3654-3670, 2021. | Partial match to this article, but the authors, title, and metadata are largely wrong. |

| Five-Mode Tucker-LoRA for Video Diffusion on Conv3D Backbones | 2.5 | Five-Mode Tucker-LoRA for Video Diffusion on Conv3D Backbones | OpenReview | https://app.gptzero.me/documents/eb0fd660-ed00-4769-a940-3d093d4f1ec1/share | Shengming Chen, Yuxin Wang, et al. Videocrafter: Open diffusion models for high-quality video generation. arXiv preprint arXiv:2305.07932, 2023b. | A paper with the same title exists, but the authors and arXiv ID are wrong. |

| Activation-Guided Regularization: Improving Deep Classifiers using Feature-Space Regularization with Dynamic Prototypes | 2.5 | Activation-Guided Regularization: Improving Deep Classifiers using Feature-Space Regularization with Dynamic Prototypes | OpenReview | https://app.gptzero.me/documents/4031111e-24ef-4e06-908e-18ab99b08932/share | Wentao Cheng and Tong Zhang. Improving deep learning for classification with unknown label noise. In International Conference on Machine Learning, pp. 6059-6081. PMLR, 2023. | A similar paper exists. |

| Sparse-Smooth Decomposition for Nonlinear Industrial Time Series Forecasting | 2.5 | Sparse-Smooth Decomposition for Nonlinear Industrial Time Series Forecasting | OpenReview | https://app.gptzero.me/documents/c01ad49e-a788-4916-a6ee-f43314d14676/share | Yutian Chen, Kun Zhang, Jonas Peters, and Bernhard Schölkopf. Causal discovery and inference for nonstationary systems. Journal of Machine Learning Research, 22(103):1-72, 2021. | No Match |

| PDE-Transformer: A Continuous Dynamical Systems Approach to Sequence Modeling | 2.0 | PDE-Transformer: A Continuous Dynamical Systems Approach to Sequence Modeling | OpenReview | https://app.gptzero.me/documents/ba257eea-e86c-4276-84c0-08b7465e1e3e/share | Xuechen Li, Juntang Zhuang, Yifan Ding, Zhaozong Jin, Yun chen Chen, and Stefanie Jegelka. Scalable gradients for stochastic differential equations. In Proceedings of the Twenty Third International Conference on Artificial Intelligence and Statistics (AISTATS 2020), volume 108 of Proceedings of Machine Learning Research, pp. 3898-3908, 2020. | The paper exists and the first author is correct, but all other authors and the page range are wrong. |

| SAFE-LLM: A Unified Framework for Reliable, Safe, And Secure Evaluation of Large Language Models | 2.0 | SAFE-LLM: A Unified Framework for Reliable, Safe, And Secure Evaluation of Large Language Models | OpenReview | https://app.gptzero.me/documents/05ee7ff4-40e2-48b7-b5bd-8c307d7db669/share | Kuhn, J., et al. Semantic Entropy for Hallucination Detection. ACL 2023. | A similar paper with different authors can be found here. |

| PIPA: An Agent for Protein Interaction Identification and Perturbation Analysis | 2.0 | PIPA: An Agent for Protein Interaction Identification and Perturbation Analysis | OpenReview | https://app.gptzero.me/documents/5031a806-1271-4fd3-b333-2554f47cb9fa/share | Alex Brown et al. Autonomous scientific experimentation at the advanced light source using language-model-driven agents. Nature Communications, 16:7001, 2025. | No Match |

| Typed Chain-of-Thought: A Curry-Howard Framework for Verifying LLM Reasoning | 2.0 | Typed Chain-of-Thought: A Curry-Howard Framework for Verifying LLM Reasoning | OpenReview | https://app.gptzero.me/documents/9d2e3239-99db-4712-be7f-e032156d92a5/share | DeepMind. Gemma scope: Scaling mechanistic interpretability to chain of thought. DeepMind Safety Blog, 2025. URL https://deepmindsafetyresearch.medium.com/evaluating-and-monitoring-for-ai-scheming-8a7f2ce087f9. Discusses scaling mechanistic interpretability techniques to chain-of-thought and applications such as hallucination detection. | A similar URL exists, and the title is similar to this blog. However, no exact match exists. |

| Graph-Based Operator Learning from Limited Data on Irregular Domains | 2.0 | Graph-Based Operator Learning from Limited Data on Irregular Domains | OpenReview | https://app.gptzero.me/documents/6c52217f-fb88-4bd8-85aa-bd546e1fa88c/share | Liu, Y., Lütjens, B., Azizzadenesheli, K., and Anandkumar, A. (2022). U-netformer: A u-net style transformer for solving pdes. arXiv preprint arXiv:2206.11832. | No Match |

| KARMA: Knowledge-Aware Reward Mechanism Adjustment via Causal AI | 2.0 | KARMA: Knowledge-Aware Reward Mechanism Adjustment via Causal AI | OpenReview | https://app.gptzero.me/documents/92b6492c-68ad-41a3-ae35-628d67f053e0/share | Reinaldo A. C. Bianchi, Luis A. Celiberto Jr, and Ramon Lopez de Mantaras. Knowledge-based reinforcement learning: A survey. Journal of Artificial Intelligence Research, 62:215-261, 2018. | No Match |

| Microarchitecture Is Destiny: Performance and Accuracy of Quantized LLMs on Consumer Hardware | 2.0 | Microarchitecture Is Destiny: Performance and Accuracy of Quantized LLMs on Consumer Hardware | OpenReview | https://app.gptzero.me/documents/4504a39a-af72-41ab-9679-6f6a017a3275/share | Zhihang Jiang, Dingkang Wang, Yao Li, et al. Fp6-llm: Efficient llm serving through fp6-centric co-design. arXiv preprint arXiv:2401.14112, 2024. | The arXiv ID corresponds to a very similar paper, but the authors are wrong and the title is altered. |

| Decoupling of Experts: A Knowledge-Driven Architecture for Efficient LLMs | 1.6 | Decoupling of Experts: A Knowledge-Driven Architecture for Efficient LLMs | OpenReview | https://app.gptzero.me/documents/74eade70-da36-4635-8749-5e1d04748b6d/share | H Zhang, Y L, X W, Y Z, X Z, H W, X H, K G, Z W, H W, H C, H L, and J W. Matrix data pile: A trillion-tokenscale datasets for llm pre-training. arXiv preprint arXiv:2408.12151, 2024. | No Match; the arXiv ID is unrelated. |

| QUART: Agentic Reasoning To Discover Missing Knowledge in Multi-Domain Temporal Data. | 1.5 | QUART: Agentic Reasoning To Discover Missing Knowledge in Multi-Domain Temporal Data. | OpenReview | https://app.gptzero.me/documents/c6f30343-3948-4c07-b7de-6b1407d5daa6/share | Meera Jain and Albert Chen. Explainable ai techniques for medical applications: A comprehensive review. AI in Healthcare, 5:22-37, 2024. | No Match |

| From Physics-Informed Models to Deep Learning: Reproducible AI Frameworks for Climate Resilience and Policy Alignment | 1.5 | From Physics-Informed Models to Deep Learning: Reproducible AI Frameworks for Climate Resilience and Policy Alignment | OpenReview | https://app.gptzero.me/documents/a7ed6c42-4349-4b45-a356-0e325090e5af/share | MIT Climate Group. A cautionary tale for deep learning in climate science. https://example.com, 2019. | The title matches this paper, but the citation is obviously hallucinated. |

| A superpersuasive autonomous policy debating system | 1.5 | A superpersuasive autonomous policy debating system | OpenReview | https://app.gptzero.me/documents/b792a4de-baa8-47d4-b880-87b330a482ce/share | Roy Bar-Haim, Shachar Bhattacharya, Michal Jacovi, Yosi Mass, Matan Orbach, Eyal Sliwowicz, and Noam Slonim. Key point analysis via contrastive learning and extractive argument summarization. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pages 7953-7962, Online and Punta Cana, Dominican Republic, November 2021a. Association for Computational Linguistics. doi: 10.18653/v1/2021.emnlp-main.629. URL https://aclanthology.org/2021.emnlp-main.629. | A paper with the same title exists, but the authors and URL are wrong. |

| AnveshanaAI: A Multimodal Platform for Adaptive AI/ML Education Through Automated Question Generation and Interactive Assessment | 1.5 | AnveshanaAI: A Multimodal Platform for Adaptive AI/ML Education Through Automated Question Generation and Interactive Assessment | OpenReview | https://app.gptzero.me/documents/720d6d24-2223-4e0e-95b9-6dfce674f8c7/share | Shiyang Liu, Hongyi Xu, and Min Chen. Measuring and reducing perplexity in large-scale llms. arXiv preprint arXiv:2309.12345, 2023. | No Match |

| AI-Assisted Medical Triage Assistant | 1.0 | AI-Assisted Medical Triage Assistant | OpenReview | https://app.gptzero.me/documents/391b5d76-929a-4f3f-addf-31f6993726f2/share | [3] K. Arnold, J. Smith, and A. Doe. Variability in triage decision making. Resuscitation, 85:1234-1239, 2014. | No Match |

| Deciphering Cross-Modal Feature Interactions in Multimodal AIGC Models: A Mechanistic Interpretability Approach | 0.67 | Deciphering Cross-Modal Feature Interactions in Multimodal AIGC Models: A Mechanistic Interpretability Approach | OpenReview | https://app.gptzero.me/documents/d4102812-01c4-45b2-aea8-59e467d31fd4/share | Shuyang Basu, Sachin Y Gadre, Ameet Talwalkar, and Zico Kolter. Understanding multimodal llms: the mechanistic interpretability of llava in visual question answering. arXiv preprint arXiv:2411.17346, 2024. | A paper with this title exists, but the authors and arXiv ID are wrong. |

| Scalable Generative Modeling of Protein Ligand Trajectories via Graph Neural Diffusion Networks | 0.5 | Scalable Generative Modeling of Protein Ligand Trajectories via Graph Neural Diffusion Networks | OpenReview | https://app.gptzero.me/documents/32d43311-6e69-4b88-be99-682e4eb0c2cc/share | E. Brini, G. Jayachandran, and M. Karplus. Coarse-graining biomolecular simulations via statistical learning. J. Chem. Phys., 154:040901, 2021. | There is no match for the title and authors, but the journal, volume, and year match this article |