How to check if a student used AI (Teacher’s Guide)

Check if a student used AI with proven methods: analyze drafts, run detectors like GPTZero, and identify writing inconsistencies step by step.

Just a few years ago, plagiarism typically referred to copying from a classmate. But what do we do when AI tools have become the new classmates? With these tools, students can generate essays instantly, and a recent survey found that 59% of students surveyed use ChatGPT for schoolwork, up from 52% last year.

When students rely on AI too heavily, they risk the deterioration of their critical thinking skills as well as their own writing voice. This is why it’s essential for educators to know exactly how to tell if something is written by AI. Below, we’ll cover how to check if a student used AI and what signs to look for when you find yourself questioning, “Did ChatGPT write this?”

- Start with a baseline

- Run a scan

- Compare against their past work

- Talk to the student

- Verify sources

- Triangulate before you conclude

Challenges of AI Use in the Classroom

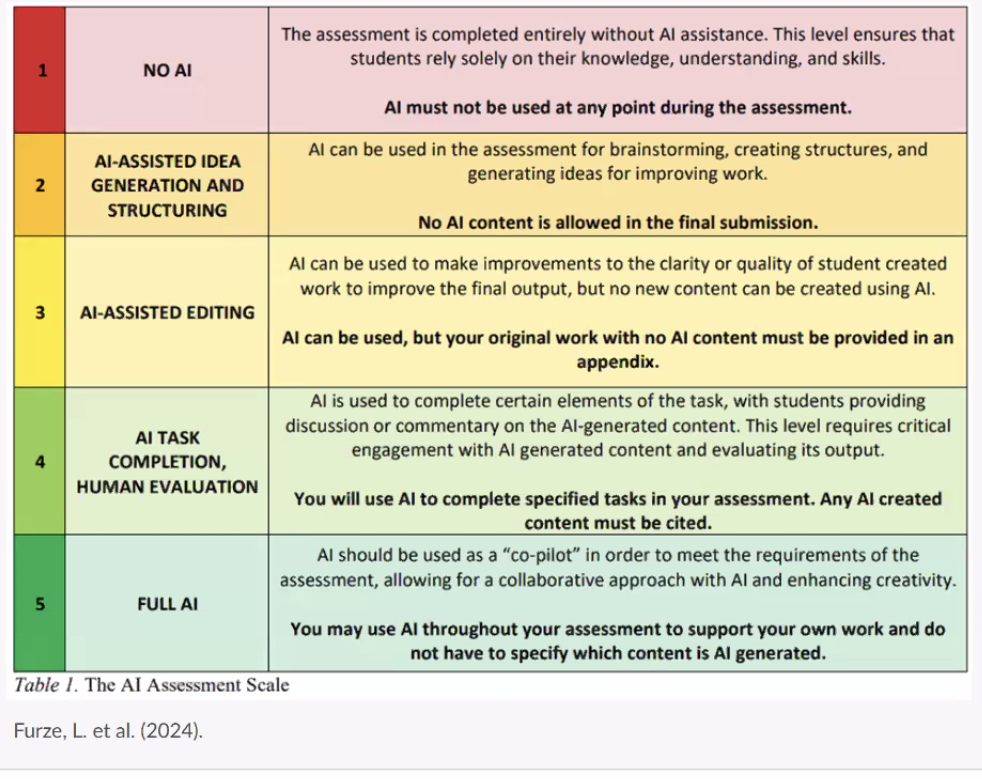

One of the main challenges of AI use in the classroom is that we don’t yet have universal rules for how the tools should be used. While some schools have started experimenting with policies, most haven’t caught up yet, which leaves individual teachers to make the call. In the absence of firm guidelines, students can easily cross boundaries they might not have even known were there, especially as different departments test their own comfort levels with the AI Assessment Scale.

Detection also brings another challenge, as we explored in our popular Teaching Responsibly with AI webinar series with Antonio Byrd. Too many educators, he argued, are falling into “surveillance pedagogy”: leading with suspicion and monitoring for misconduct as a starting point. “I don’t want to be raising an eyebrow at my students all the time, tracking and scrutinising everything they do throughout the writing process,” he said. “That’s not the relationship I want.”

AI models are updating all the time, making it harder to decipher what’s been generated by machines and what’s been written by humans. The risk goes beyond academic dishonesty: when students lean too heavily on AI, they miss out on building their intellectual muscles, which is the core purpose education is meant to serve. For teachers, this means finding their way through an ever-shifting and high-stakes landscape while already juggling heavy, time-intensive workloads.

Steps to check if a student used AI

1) Start with a baseline

It’s easy to assume that the first step is to run a scan, but actually, it’s about knowing your students. Before you even think about running an AI scan, try to take a ‘snapshot’ of each student’s natural writing voice.

An easy way to do this is to have them write a short piece in class, without devices or outside help, which can then serve as a snapshot of their style with the quirks and patterns that come when they write freely.

Keep this alongside a couple of earlier assignments, so you have something to compare against later if a submission ever feels out of character.

As you review these samples, pay attention to patterns: maybe they often misspell a certain word, or use British and American spelling interchangeably, or default to simple (or rambling) sentence structures, or have limited (or adventurous) vocabulary.

2) If something feels “off”, run a scan as a conversation starter

Sometimes you’ll read a piece of writing and something just won’t sit right. The tone feels too abruptly formal, or the ideas seem oddly generic.

When that happens, it’s worth running the work through an AI detector simply to gather more information. The key is to treat the scan as a starting point. Detection tools are most effective when they’re part of an ongoing conversation, where students don’t feel like you’re trying to “catch” them.

Maybe they used AI to brainstorm ideas but wrote the essay themselves, or they relied on a grammar tool but genuinely didn’t realise it crossed a line. A strong detector (such as GPTZero) will do more than spit out a percentage score: it will highlight specific sentences that might be AI-generated and explain why they were flagged.

That context matters: it helps you spot exactly where the concerns are and gives you something tangible to discuss with the student. Alongside this, open the Writing Report or version history and look at how the piece came together over time.

3) Compare against their past work

Once you’ve run a scan, zoom out and look at the bigger picture. Compare the piece to what the student has turned in before. Does it sound like them with their usual phrasing, their natural rhythm – or does something feel off? Maybe the vocabulary has taken a sudden leap or their typical hallmarks have completely disappeared.

There’s a difference between suspecting a student based on a gut feeling and noticing when something no longer sounds like them. Comparing past work gives you crucial context and helps you distinguish between genuine improvement and a piece that’s been artificially smoothed out by AI.

For example, AI text often sounds correct but wooden: watch for too much passive voice, overuse of vague pronouns like “it” and “they”, a refusal to use contractions (“cannot”, “do not”), and the same sentence structure on repeat.
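For teachers comfortable with a little scripting, the comparison above can be roughed out in code. The sketch below is only an illustration: the function names and the three surface features (sentence length, contraction rate, vocabulary variety) are assumptions chosen for this example, not how GPTZero or any other detector works, and the numbers cannot show that a piece was AI-generated.

```python
import re

# Illustrative patterns only (assumptions for this sketch).
# Note: the contraction pattern also counts possessives such as "Sam's".
CONTRACTION = re.compile(r"\b\w+['’](?:t|s|re|ve|ll|d|m)\b", re.IGNORECASE)
WORD = re.compile(r"[A-Za-z]+(?:['’][A-Za-z]+)?")
SENTENCE_END = re.compile(r"[.!?]+")


def style_profile(text: str) -> dict:
    """Summarise a few surface features of one writing sample."""
    words = WORD.findall(text)
    sentences = [s for s in SENTENCE_END.split(text) if s.strip()]
    n_words = max(len(words), 1)  # avoid dividing by zero on empty text
    return {
        "avg_sentence_length": len(words) / max(len(sentences), 1),
        "contractions_per_100_words": 100 * len(CONTRACTION.findall(text)) / n_words,
        "vocabulary_variety": len({w.lower() for w in words}) / n_words,
    }


def profile_shift(baseline: str, submission: str) -> dict:
    """Return submission minus baseline for each feature."""
    before = style_profile(baseline)
    after = style_profile(submission)
    return {name: round(after[name] - before[name], 2) for name in before}


if __name__ == "__main__":
    in_class_sample = "I don't think it's fair. We can't go."
    new_submission = "I do not think it is fair. We cannot go."
    print(profile_shift(in_class_sample, new_submission))
```

A large shift, such as contractions disappearing entirely, is a prompt to look more closely and talk to the student, not evidence on its own. Short samples will produce noisy numbers, so longer baseline pieces give a fairer comparison.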

4) Actually talk to the student

If you still have concerns after comparing their work, take the time to speak with the student directly, and keep the tone calm and supportive. Help them see that this isn’t an interrogation but a chance to understand their process, which can reveal a lot about how the piece came together.

Ask open and straightforward questions and have them walk you through why they chose a particular source, or describe how they moved from an outline to a finished draft. It’s normal for students to feel nervous and therefore forget small details, especially if some time has passed since they wrote it.

But if they consistently struggle to explain their choices or seem genuinely confused about core parts of their own work, that’s a strong sign the writing may not be entirely theirs.

5) Verify sources

Next, don’t forget to look closely at the sources a student has used. AI tools are notorious for fabricating citations that look convincing but don’t actually exist. Start by spot-checking a few of the references.

First and most obviously: do the publications exist? Can you find the exact quote in the original text? Does the source actually support the argument the student is making?

Watch out for citations that seem too perfect, as even when the sources are real, AI tools can misattribute quotes or take information out of context, creating a misleading impression of research.

For recent facts, double-check the timing, as many AI models are trained on older datasets, meaning they often stumble when writing about current events or up-to-date research. If the essay confidently cites very recent studies or statistics but you can’t verify them, that’s a red flag.

6) Triangulate before you conclude

Now that you’ve done the above, step back and look at the whole picture. Pull together the process evidence (drafts with timestamps and version history) alongside the detector’s highlights, your style comparison with past work, notes from your conversation with the student, and any source checks. Remember, patterns matter more than any single red flag.

Consider benign explanations, e.g., a jump in polish might come from assistive grammar tools or a student who’s been practising hard, especially for learners with English as an additional language.

Most of all, keep your professional judgement at the centre. If several indicators align, choose a proportionate response (such as an in-class rewrite). If they don’t, share what reassured you and use the moment to reiterate the AI policy in your classroom.
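If it helps to keep notes in a consistent shape, the weighing described in this step could be recorded as a simple checklist. The sketch below is hypothetical: the field names and the threshold of three aligned indicators are assumptions made for illustration (the guide only says "several"), and the output is a prompt for your own judgement, not a verdict.

```python
from dataclasses import dataclass, fields


@dataclass
class Evidence:
    """One True/False note per check from the steps above (hypothetical names)."""
    process_history_has_gaps: bool      # drafts, timestamps, version history
    detector_flagged_passages: bool     # detector highlights
    style_differs_from_baseline: bool   # comparison with past work
    student_could_not_explain: bool     # notes from the conversation
    sources_failed_checks: bool         # source spot-checks


def aligned_indicators(evidence: Evidence) -> list[str]:
    """List the names of the checks that raised a concern."""
    return [f.name for f in fields(evidence) if getattr(evidence, f.name)]


def suggested_next_step(evidence: Evidence, threshold: int = 3) -> str:
    """Suggest a next step; `threshold` is an assumed cut-off, adjust as needed."""
    if len(aligned_indicators(evidence)) >= threshold:
        return "Consider a proportionate response, such as an in-class rewrite."
    return "Share what reassured you and restate the classroom AI policy."


if __name__ == "__main__":
    notes = Evidence(True, True, True, False, False)
    print(aligned_indicators(notes))
    print(suggested_next_step(notes))
```

The point of the structure is that a single flag, such as a detector highlight alone, never reaches the threshold by itself. Benign explanations still need to be weighed by hand before acting on the suggestion.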

Strategies To Combat AI-Generated Content

The best way to cut down on AI misuse is to make assignments and classroom culture less vulnerable in the first place, which can be done with the following strategies.

Set Firm Policies

Begin with a written AI policy which lets students know exactly what’s okay and what’s not, whether that’s using AI to brainstorm ideas or clean up grammar, versus handing in something completely generated by a tool.

Be as upfront as possible about what you’ll need from them, such as drafts or source notes. When the rules are well-known and consistent, students are far less likely to cross a line by accident.

Design Assignments AI Can’t Do

The more personal and specific the assignment, the harder it is for AI to take over. A few examples could be journals that ask for reflection, fieldwork reports, class debates, or real-world projects.

If the work requires a student to reflect on and articulate their own insights, their experience, or what they’ve learned in class, it’s much harder for them to outsource it.

Use a Mix of Approaches (Including Writing Report)

Try combining a mixture of in-class writing and drafts submitted in stages, as well as short conversations or defences about the work. This makes it almost impossible to hand in something entirely written by AI, and also gives you a window into how each student develops their ideas over time.

You can also use GPTZero’s Writing Report to see a replay of how a piece of writing was created in Google Docs, showing exactly how it was written.

Role of GPTZero in Detecting AI-Written Content

GPTZero was built with educators in mind and is part of a bigger movement toward responsible AI use in education. When you run a scan, GPTZero goes beyond showing a score or a percentage: it highlights specific sentences that might be AI-generated and explains why, helping you make an informed judgement. It’s also designed to work smoothly with the tools you already use, such as Google Docs and Moodle.

After the scan, you can dive deeper with a Writing Report, which shows how a piece of writing evolved over time. This can reveal whether a student’s work was built up through genuine drafting and revision, making it easier to spot what is and isn’t AI-written content. Many teachers use this to support the growth of their students, helping them reflect on their writing process and build better habits.

For instance, as we’ve covered in a previous GPTZero post, Eddie del Val, who teaches composition at Mt. Hood Community College in Oregon, brought AI right into the learning process. Students could use it to brainstorm, revise, and explore ideas, but the voice, structure, and substance had to be theirs. GPTZero became a checkpoint in the process, and a way to reflect as opposed to simply detect.

GPTZero is about building a healthier culture around AI in the classroom, and helping to have more open conversations about what responsible AI use looks like, without adding to a climate of fear or punishment. We believe in using AI detectors as a guide, while keeping your own judgement and personal knowledge of the student at the heart of every decision.

Conclusion

AI is changing the way students write and learn, which is sometimes exciting, and at other times, seems to make teaching a lot more complicated. Educators are all figuring this out in real time. The key thing isn’t to “catch” students. Instead, it’s to understand them. By knowing their writing voice and using tools like GPTZero to keep the dialogue open, you can help students grow as writers while protecting the integrity of your classroom.

FAQ

What type of content can be checked?

GPTZero can scan a wide range of writing, including essays, research papers, homework submissions, and creative writing. It works well across different formats and is especially designed with educators in mind.

How accurate is GPTZero compared to other tools?

While no detector is 100% accurate, GPTZero was built to minimise false positives, especially for English language learners. It’s regularly updated to keep up with the latest AI models, like GPT-5 and Claude, so you get the most reliable results possible.

Can GPTZero also detect plagiarism?

Yes, GPTZero can help you spot plagiarism and missing citations, so you can tell the difference between AI-generated content and old-fashioned copying. This can make it easier to understand exactly what kind of issue you’re dealing with.