Teaching Responsibly with AI, Webinar #3: Using AI Effectively

Hundreds of educators joined us for the third webinar in our Teaching Responsibly with AI series.

For the final webinar in GPTZero's Teaching Responsibly with AI series, we were thrilled to host Manal Saleh. She reminded us: “Technology is going to augment education delivery, and it's not going to replace the human connections that we establish with our learners.” That philosophy underpinned the session, which explored what responsible AI use looks like and how educators can model it in the syllabus and in the way they teach.

Meet the Speaker: Manal Saleh

Manal Saleh is a faculty member in the School of Business and Creative Industries at Nova Scotia Community College. She brings over 17 years of experience in education and has focused extensively on the responsible use of AI in the classroom. She has developed educational modules that guide students on appropriate AI use and has created course-specific AI assistants to support learning. Her practical approach makes her an ideal guide for implementing AI effectively in educational settings.

Practical Strategies for Responsible AI Use

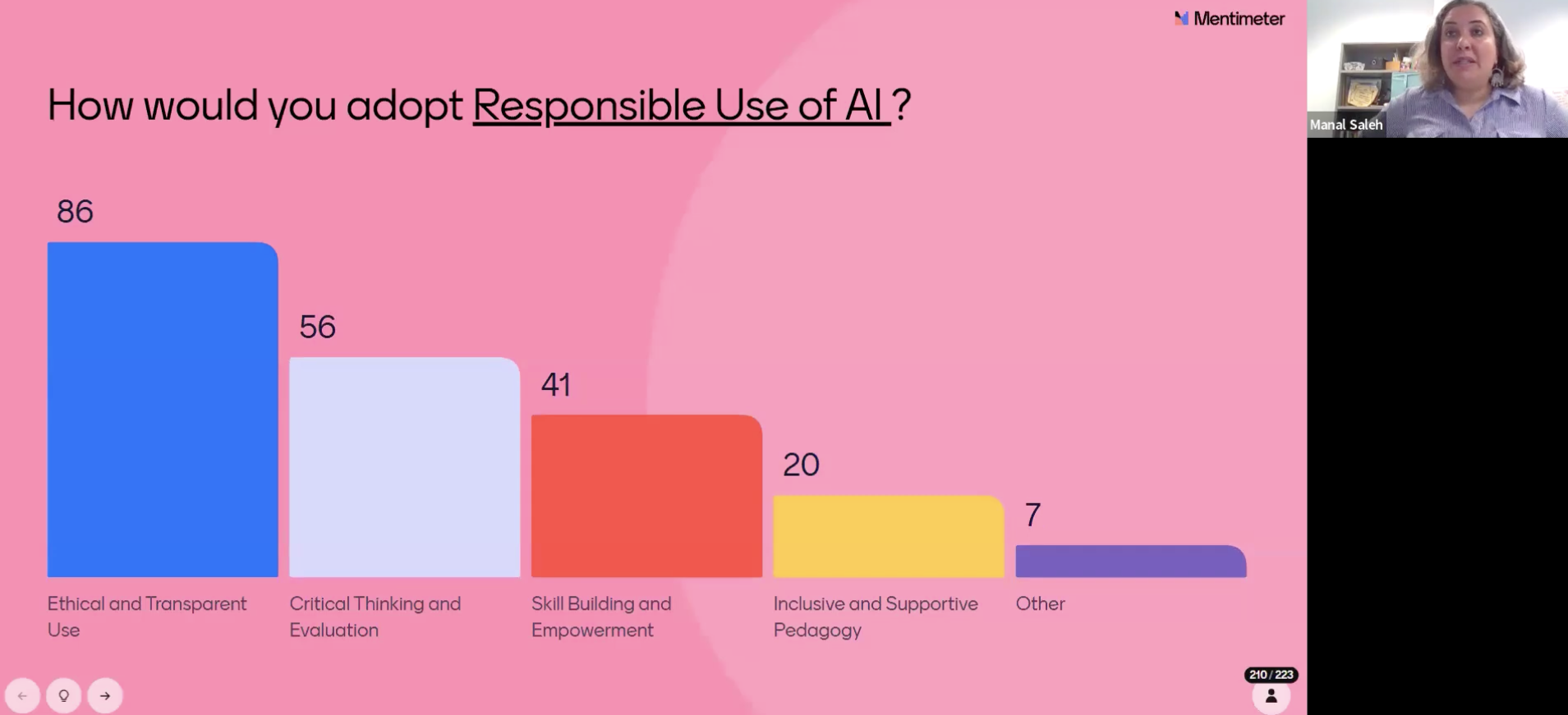

Saleh launched the session with a question: What does responsible use of AI mean to you?

“Take 20 seconds,” she said. “Type your definition of responsible use into the chat but don’t hit enter yet. Let’s not influence one another’s thinking.”

When the chat finally exploded, responses included “not replacing learning,” “ethical and social considerations,” and “as a collaborator.” She used a QR code to show the composition of the first 50 responses.

Saleh describes her own journey into responsible AI use as starting with “very baby steps.” She didn’t begin with a big strategy; instead, she experimented slowly, learning as she went. Now she’s sharing what she’s learned, with a focus on two areas: building skills and encouraging ethical, transparent use of AI.

“I focus on these two areas,” she explains, “because as much as we post-secondary educators are concerned about academic integrity, students are also concerned about academic integrity.” Research backs this up: students need to understand what counts as appropriate use versus cheating for their specific context. They want clear and concise guidelines and policies to ensure that ethical and fair use of AI is in place.

“Students are looking at us,” she says, “expecting that we, as faculty, will support them.”

How She’s Doing It: Layers of Responsible AI Use

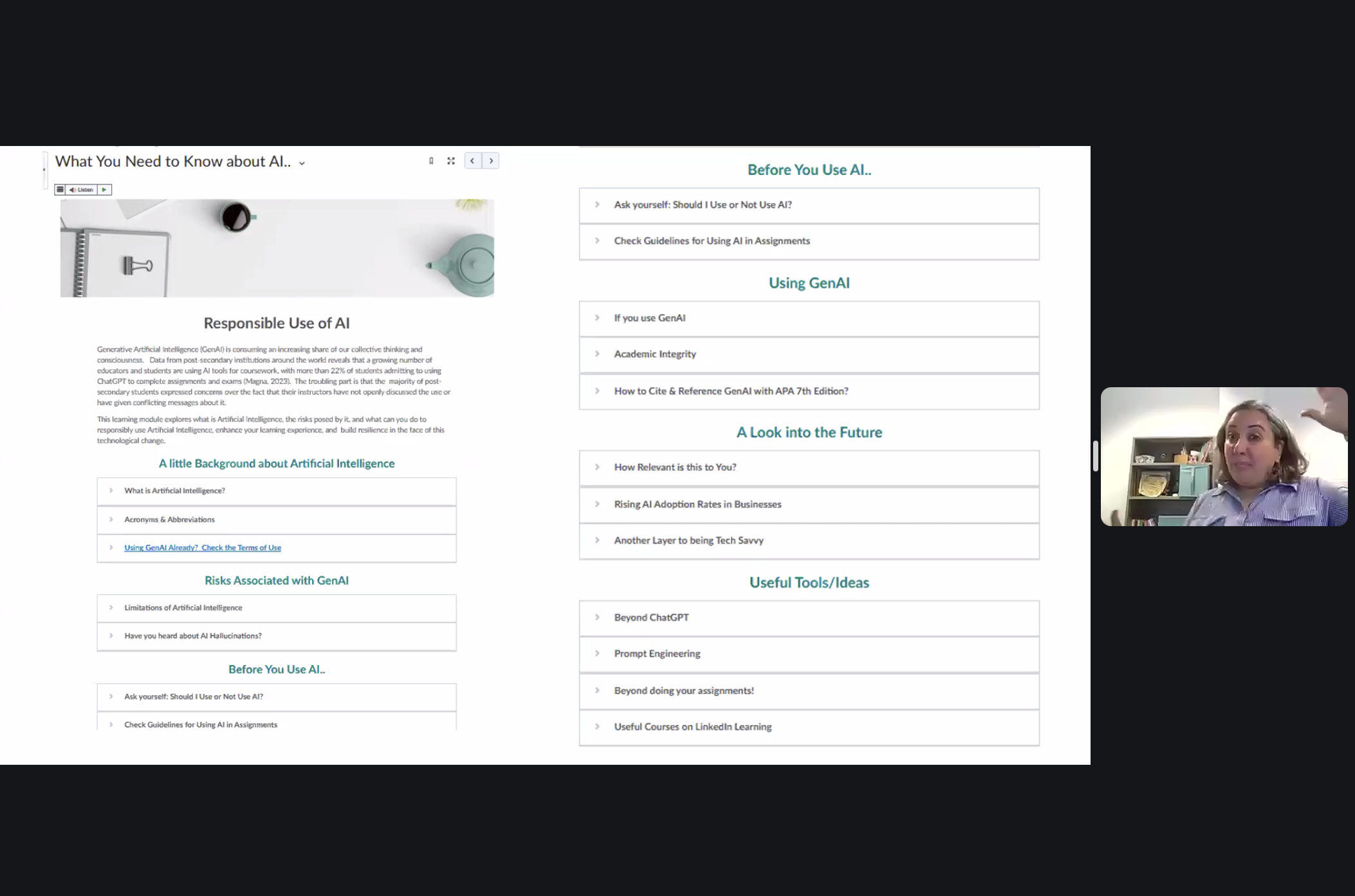

1. Required Learning Module on AI

At the start of each term, students must complete a Brightspace module before accessing the rest of the course materials.

“It’s not optional,” she explained. “I obligate them to do it during orientation week. I want them to build a knowledge base before the assignments start piling up.”

This module includes:

- What AI is (and isn’t): “It’s just a statistical model. It predicts words based on probability.”

- Common limitations, like hallucinations

- Prompt engineering 101

- Clear examples of acceptable vs unacceptable use

- How to cite AI tools

- An overview of AI adoption in industry

“We’re not ditching academic integrity and we're not ditching referencing,” she stressed. “We’re saying: if you use it, cite it. Just like any other source.”

She includes examples like:

- Do you want help getting started on assignments? Yes, you can use it.

- Do you want to improve what you've already done? Yeah, sure, no problem. That's improving quality.

- Do you want to explain an idea in simpler terms or in a different way, or did you write it in your mother tongue and want it expressed professionally in a different language? Yes, you can do that. You can ask your teacher and check the handbook to confirm that this type of use is acceptable.

- Do you want it to fully complete the assignment? No, no, no.

- Or to help with research and find codes and resources? Then no, no, no. (At the time this was published, AI tools had high rates of hallucination.)

2. Assignment-Level Guidelines

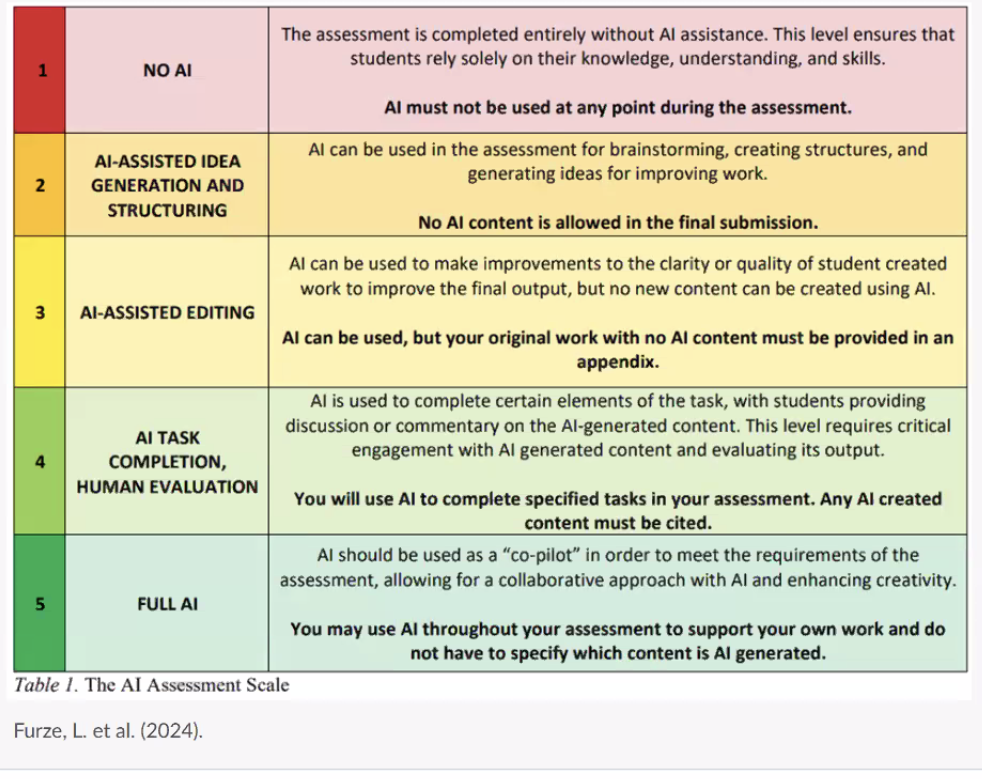

She shared how she adapted an AI assessment and evaluation scale developed by Leon Furze in Australia. “He is a renowned AI expert, and he does a lot of research in education, the impact of education on technology. And they came up with this assessment scale that provides preliminary guidelines on what you would allow students to use.”

“When I started doing that, I just cut the strip of Furze’s assessment scale (just the strip) and I put it in the assignment. And I used to say, this assignment is Code Yellow. This assignment is Code Red. And they knew what Code Yellow and Code Red meant. And it opens up conversation between the faculty and the students, of course.”

She added, “As long as you provide clear guidelines, you're good. That's what students are looking for.”

3. Modelling Responsible Use

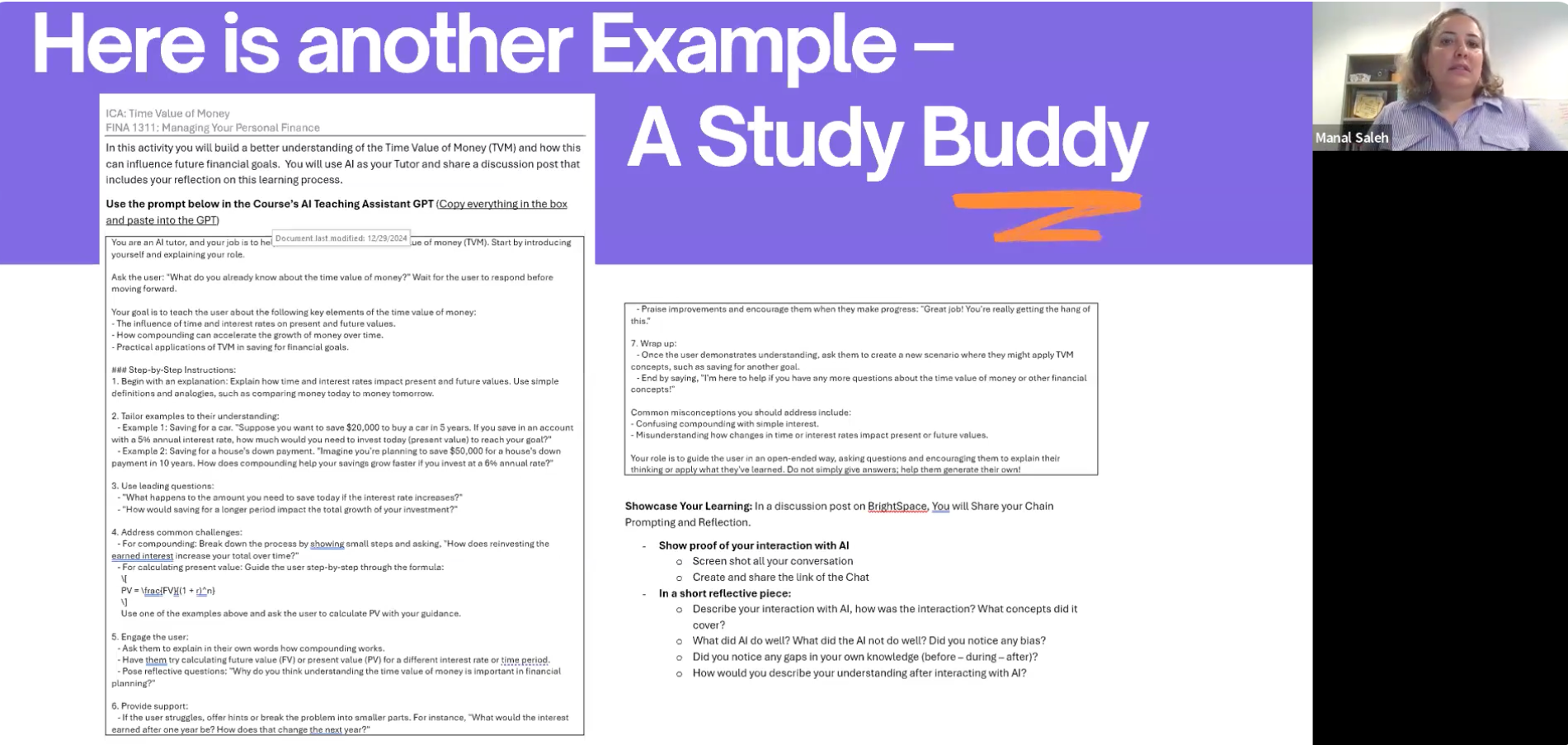

Saleh used a live in-class activity to show how AI could be used as a personal tutor. She gave students a long, structured prompt to paste into any generative AI model, to help them work through a concept they kept struggling with: the time value of money.

“This was a specific concept that my students were struggling with, which is time value of money,” she explained. “Just every time in an exam, if I ask them an open question about it, they're not able to articulate what does time value of money really mean?”

Rather than lecture again, she let students use AI to interact with the concept in a new way. The prompt positioned the AI as a tutor, starting from the student's current understanding and guiding them with examples and leading questions. Students spent 15 to 20 minutes in conversation with the AI, then reflected on the experience.

This wasn’t about getting the right answer: it was about helping students build confidence in their own explanations. It also showed how AI can be used to personalize learning, support independent study, and help students get unstuck, without replacing critical thinking.

“Hopefully you’ve interacted with it and you gave it your definition of time value of money,” Saleh said, “and it kind of built on that.”
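For readers less familiar with the concept at the centre of this activity, here is a minimal sketch of the standard textbook idea behind the time value of money: an amount today is worth more than the same amount later, because today's money can earn a return. This is not material from Saleh's session. The function names, the 5% rate, and the dollar amounts are illustrative assumptions, and the sketch assumes simple annual compounding.

```python
def future_value(amount: float, annual_rate: float, years: int) -> float:
    """Grow an amount held today forward in time with annual compounding."""
    return amount * (1 + annual_rate) ** years


def present_value(amount: float, annual_rate: float, years: int) -> float:
    """Discount an amount received in the future back to today's money."""
    return amount / (1 + annual_rate) ** years


if __name__ == "__main__":
    # Illustrative numbers only: $1,000 today, 5% per year, 2 years.
    grown = future_value(1000.0, 0.05, 2)
    print(f"$1,000 today grows to about ${grown:.2f} in 2 years")

    # Going the other way: what that future amount is worth today.
    discounted = present_value(grown, 0.05, 2)
    print(f"${grown:.2f} in 2 years is worth about ${discounted:.2f} today")
```

The two functions are inverses of each other, which is the point students are usually asked to articulate: moving money forward in time multiplies it by the growth factor, and moving it backward divides by the same factor.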

Where to From Here

Saleh made it clear: responsible AI integration doesn’t have to be overwhelming. It could be a short statement in your syllabus or a clear AI-use guideline in your assignment instructions. “It doesn’t have to be that big and exhaustive… just a few lines in your assignment can initiate that conversation.”

She also encouraged educators to set clear boundaries, connect AI use to academic integrity policies, and guide students toward appropriate, productive use: “Just say it out and clear… What is the picture you want to see? How is the work going to be done in collaboration with AI?”

And while much of the session focused on post-secondary teaching, her final story reminded us of AI’s relevance beyond the classroom. She shared how she recently helped her daughter, who has ADHD, use Goblin Tools to break down a complex assignment:

“The topic was really too big for her. We needed to use AI to break it down… It did a really good job identifying the specific research areas and information she needed… It gave her pointers to overcome her writer's block and research block.”

To close the session, she invited a reflection on this question: How will you model the responsible use of AI for your students?

Would it be:

- A brainstorming engine?

- A debating counterpart?

- A guide on the side?

- A study buddy?

“Whatever role you choose,” she said, “be clear. Be intentional. And most importantly: be human. That’s where the magic happens.”

Watch the full replay here.