'Hallucitations': The New AI Plagiarism Problem

Check citations for accuracy with GPTZero's Bibliography Checker.

AI tools can speed things up – which is part of the appeal, and also the problem. For students feeling overwhelmed with their course loads, AI tools can feel incredibly tempting to use. Why spend hours reading a dense academic text when you could ask ChatGPT to summarize it in seconds?

But when we look at the bigger picture, a more complex issue emerges – one that goes beyond plagiarism and into something more foundational. Students aren’t always stealing words: sometimes, they’re outsourcing their thinking. And that raises a whole new set of questions.

What is AI Plagiarism (And Why Is It Different)?

Traditionally, plagiarism meant copying and pasting someone else’s work without attribution. It was clear-cut and relatively easy to detect, because everyone was broadly familiar with how it worked.

AI plagiarism is different. It’s murkier, as it doesn’t involve copying anyone directly. Instead, it involves relying on AI to do too much of the intellectual work. If a student uses AI to outline their argument, summarize sources, and suggest citations, what role did they actually play in the work?

The reality is that AI doesn’t just change how students write. It changes how they research, and that's where a lot of the real risks start to show up.

The Danger of Research Shortcuts

For many students, the logic is straightforward: academic papers can be long and overwhelming, and some of them turn out not to be relevant. As one student said: “It’s too long to read sources, especially if some are irrelevant, or I just need a quick fact or statistic.”

The problem is that this kind of thinking can lead students to see research as a box-ticking exercise. Instead of engaging with material, they rely on AI to do the filtering, the understanding, and sometimes the writing – all while appearing like they’ve done the intellectual heavy lifting.

On the other side, teachers are noticing a frightening trend: “I’m worried that students will lose the ability to express original ideas in their own voices, and think that they are just a conduit for taking in information and regurgitating it.”

When students stop properly engaging with messy, complex source material, they also miss out on thinking processes like spotting bias, making connections, and learning about nuance. These are the very skills education is meant to develop.

Most importantly, they begin to lose their academic voice. If AI is shaping every summary and citation, the student arguably becomes invisible in the work. They risk slowly losing the ability to research, argue, and write independently.

A Credibility Crisis around ‘Hallucitations’

One of the biggest concerns in educational institutions at the moment is around credibility and verification. AI-generated citations might sound legitimate, with authentic journal titles and author names that are familiar enough. Yet despite the pristine formatting, when you look up the cited works, they often don’t exist. In other words, AI tools can ‘hallucinate’ the citations – or, as Kate Crawford has called them, “hallucitations.”

As one academic we interviewed shared: “In scientific and academic research, the main concern is around credibility and verification. If [students’] citations don't match their references, or their citations or references do not support statements they are making, then it will definitely be a matter of concern and they will be considered as using AI.”

Even when citations do refer to real sources, they can still be problematic. Students may misrepresent what the source says, especially if they haven’t read it. The burden of verifying these citations then falls on teachers, which can be incredibly time-consuming. In fact, some teachers report that checking citations can double or even triple their marking time.
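For readers comfortable with a little scripting, part of that first check (do the in-text citations match the reference list at all?) can be roughed out in a few lines. What follows is a minimal sketch, not a description of how any GPTZero tool works. It assumes simple APA-style author-year citations, and the function names and the "Placeholder" citation are invented for illustration. A match only shows that a citation has a reference entry. It says nothing about whether the source exists or supports the claim, so it does not replace reading the source.

```python
import re

# Matches simple author-year citations such as "(Giddens, 1990)",
# "(Held & McGrew, 2007)" or "(Smith et al., 2020)".
# Assumption: APA-like in-text style; other styles need a different pattern.
CITATION = re.compile(
    r"\(([A-Z][A-Za-z'\-]+(?: (?:&|and) [A-Z][A-Za-z'\-]+)?(?: et al\.)?),? (\d{4})\)"
)


def in_text_citations(essay):
    """Return the set of (first author surname, year) pairs cited in the essay."""
    pairs = set()
    for names, year in CITATION.findall(essay):
        first_author = re.split(r" (?:&|and|et) ", names)[0]
        pairs.add((first_author, year))
    return pairs


def unmatched_citations(essay, references):
    """Return citations with no reference entry that starts with the
    surname and contains the year."""
    missing = []
    for surname, year in sorted(in_text_citations(essay)):
        found = any(
            ref.strip().startswith(surname) and year in ref
            for ref in references
        )
        if not found:
            missing.append((surname, year))
    return missing


# Illustrative input: the second citation is a made-up placeholder.
essay = (
    "Globalization stretches social relations across distance (Giddens, 1990). "
    "Later work disputes this (Placeholder, 2021)."
)
references = ["Giddens, A. (1990). The Consequences of Modernity. Polity Press."]

print(unmatched_citations(essay, references))  # [('Placeholder', '2021')]
```

Anything the script flags still needs a human look, and anything it passes still needs checking against the source itself.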

Ultimately, this isn’t just about catching “cheating”. The deeper issue is around making sure students are building arguments based on genuine evidence and not hallucinated support from a chatbot.

‘Hallucitations’ in Practice

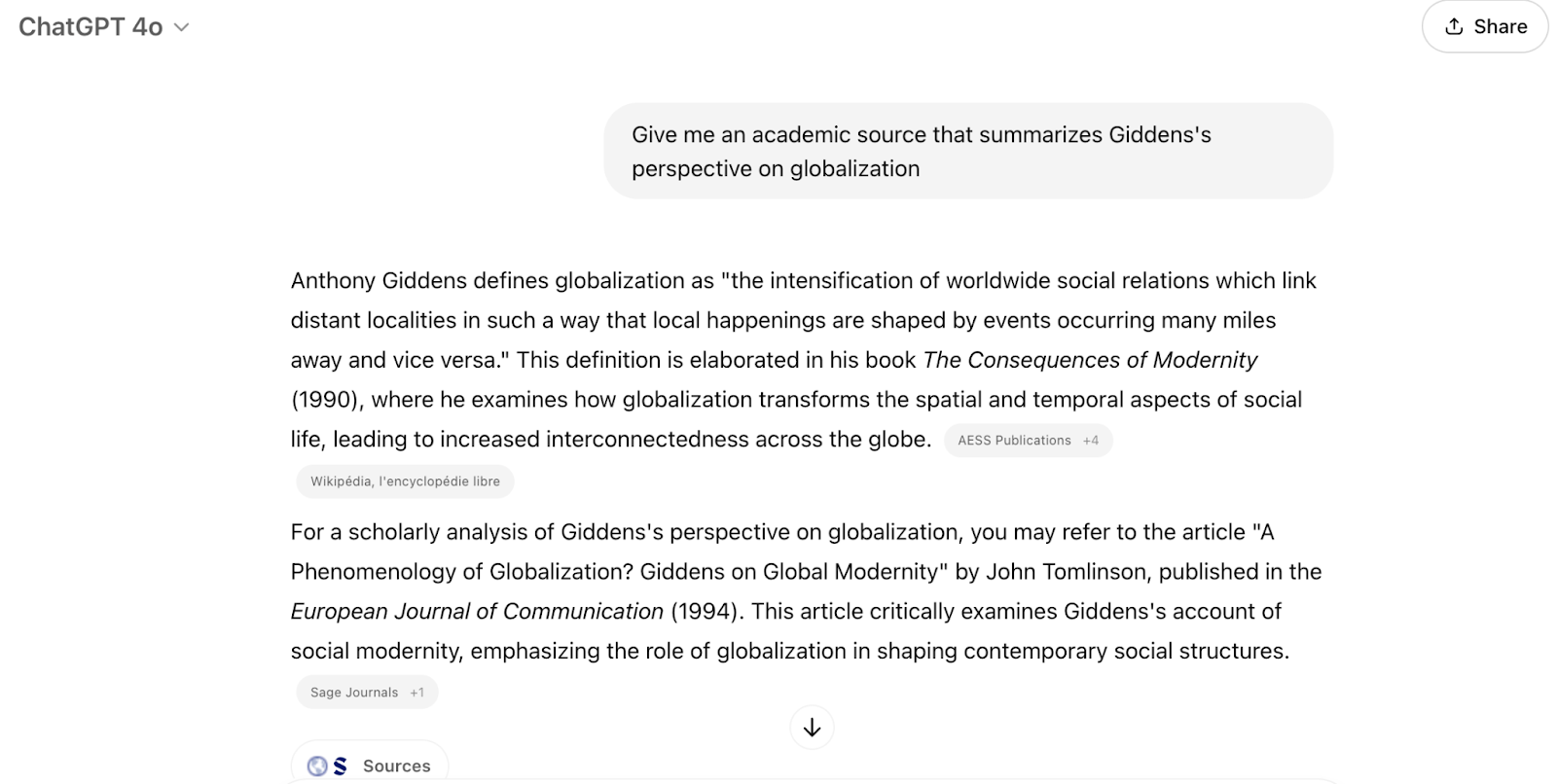

To demonstrate how ChatGPT can imagine sources that don’t exist, let’s take the example of Giddens’s theories on globalization.

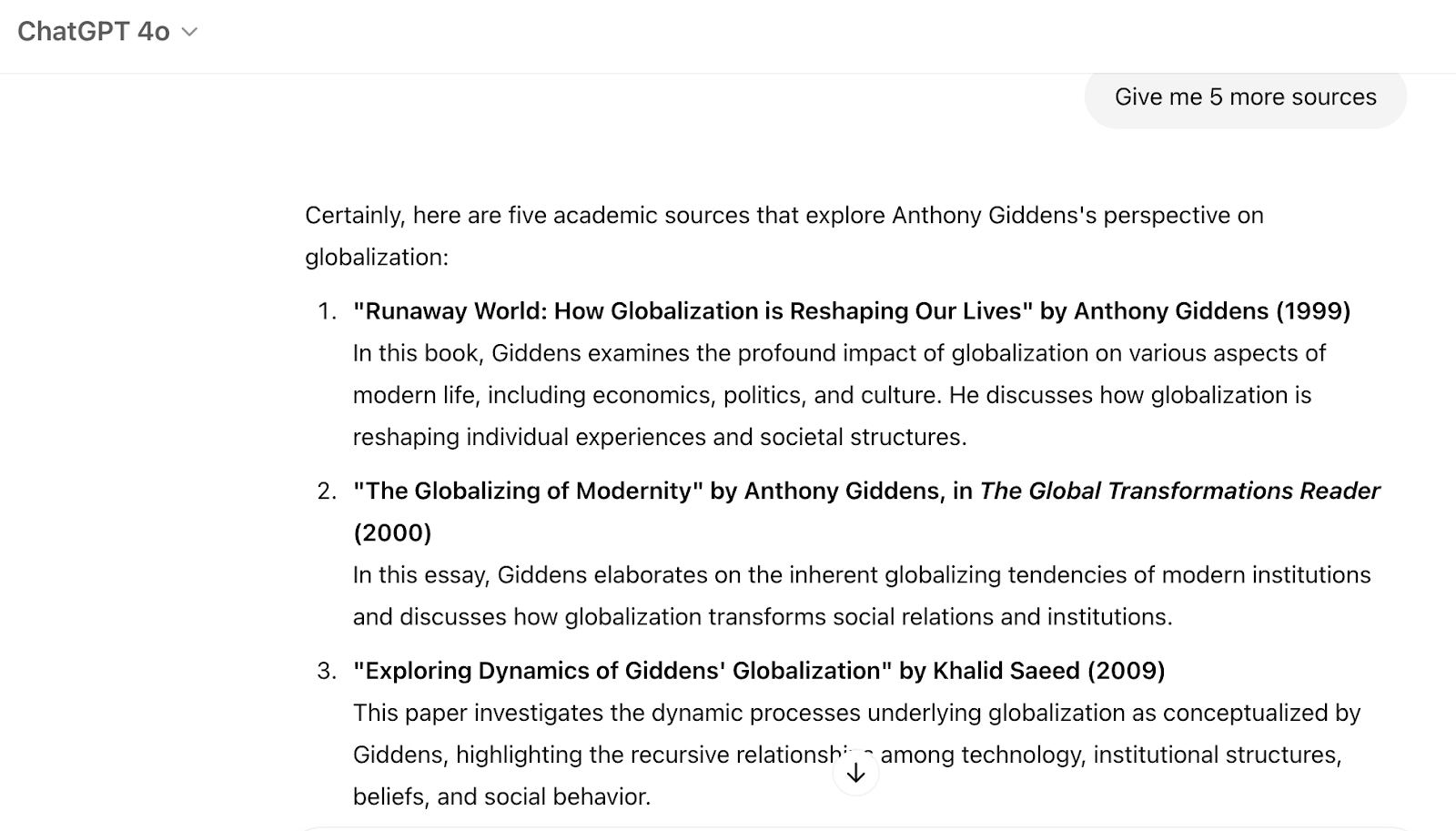

This looks accurate enough. Yet when ChatGPT is pushed for five more sources, the hallucitations begin, specifically with the third source cited.

Clicking ‘Sources’ brings up the suggested URLs. The third one, supposedly by Khalid Saeed, is linked on the right.

However, when you click on the link, you can clearly see that Khalid Saeed is very much not the author.

So while it’s true that Khalid Saeed has contributed to the academic discourse around globalization, he is not the author of this specific work.

It’s a prime example of how important it is to remember the disclaimer that ChatGPT itself makes: “ChatGPT can make mistakes. Check important info.”

What Can Educators Do?

This is a space that is evolving quickly. We recommend the “Swiss cheese” strategy for AI in education: combining layered, imperfect tools to protect learning and integrity. In this case, there are several steps we recommend taking to stop students from using AI tools as a research crutch.

Try GPTZero's plagiarism scan

GPTZero’s online plagiarism checker helps you identify plagiarized content by determining how much of the submitted text is original, how much was found online, and from which sources. Just copy and paste text or upload a file into our plagiarism detector, and click ‘Scan’ to check for similar texts.

Demonstrate “Hallucitations”

Show students that AI-generated citations can often be false using your own relevant examples. Emphasize that the reason students are in your classroom is to learn and that by relying too heavily on AI tools, they are on a slippery slope to degrading their own learning abilities.

Ask for metacognitive reflection

Have students include a short note explaining how they approached the assignment. What tools did they use, and what decisions did they make? What did they struggle with? These simple additions help to reveal any red flags.

Require annotated bibliographies

Get students to briefly summarize what each source said and how it was used. This can show whether they actually understood and engaged with the research material that ideally forms the intellectual backbone of their argument.

Helping students use AI in a thoughtful way is an ongoing journey and is sure to evolve alongside the tools themselves. But for now, a powerful first step is showing them that AI tools aren’t always as credible as they are confident. Exposing the gap between what seems true and what is actually true can help students trust their own intellectual instincts – one of the core pillars of education in the first place.

You can verify citations quickly and effortlessly with GPTZero's Bibliography Checker.