Deloitte's Citation Situation & GPTZero's Citation Solution

Earlier this year, University of Sydney academic Christopher Rudge unexpectedly created a costly headache for Deloitte Australia, simply by checking a few citations. While reading a new report from the international consulting company’s local firm, he stumbled across a reference to a book allegedly written by one of his colleagues at the University of Sydney, law professor Lisa Burton Crawford. He knew immediately that the book didn’t exist.

This discovery eventually led to an investigation that forced Deloitte to refund $98,000 (AUD) to the Australian government, and raised pertinent questions about responsible AI use in professional services.

GPTZero used our Citation Check to analyze the 234-page report and identified more than 30 issues out of the total 141 citations, including 19 hallucinations. You can see this table with all citations we found to be hallucinated, which were verified by an expert on our team who holds a PhD in history. Using GPTZero’s Citation Check would have saved roughly $5,000 per citation, all within minutes. If you're interested in learning more, you can reach out to our team here.

The Story

The Deloitte report in question was the result of a $440,000 (AUD) contract between the consulting firm and the Australian Department of Employment and Workplace Relations (DEWR). Published under the title Targeted Compliance Framework: Independent Review Final Report on July 4, 2025 (mirror), it reviewed the department’s system for imposing automated penalties on welfare recipients.

After Rudge approached the Australian Financial Review, reporters confirmed that the Crawford citation was only one of many fabricated quotes, case references, articles, and other sources.

DEWR eventually identified more than 50 suspect citations in an internal review. In response to the controversy, Deloitte Australia updated the document to replace the problematic citations, acknowledged that the report had been produced with the help of AI, and apologized to DEWR. While Deloitte never admitted that the citations in question were the result of generative AI, many observers (including Rudge) are convinced they are AI hallucinations.

Deloitte also agreed to refund $98,000 (AUD) to the Australian government, which works out to about $5,000 per fabricated citation. However, when our GPTZero team ran the report through our Citation Check tool, we discovered we could have saved Deloitte a lot of money.
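For readers who want to check the per-citation figure, here is a minimal sketch of the arithmetic. It assumes the refund is spread evenly across the hallucinated citations counted in this post, which reports both 19 and 20 depending on the passage; the helper name `refund_per_citation` is purely illustrative.

```python
# Refund figure quoted in this post (AUD).
REFUND_AUD = 98_000


def refund_per_citation(refund_aud: float, fabricated_count: int) -> float:
    """Return the refund divided evenly across the fabricated citations."""
    if fabricated_count <= 0:
        raise ValueError("fabricated_count must be positive")
    return refund_aud / fabricated_count


if __name__ == "__main__":
    # Assumption: the divisor is the hallucination count reported in this post
    # (19 in the summary, 20 in the tables).
    for count in (19, 20):
        per_citation = refund_per_citation(REFUND_AUD, count)
        print(f"{count} citations: about ${per_citation:,.0f} AUD each")
```

Either count lands close to the rounded $5,000 figure: roughly $5,158 with 19 citations and $4,900 with 20.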

The Solution

Our team used GPTZero’s Citation Check to analyze the report, just as we used it to check the MAHA report earlier this year. Citation Check is our solution to the epidemic of hallucinated sources threatening legal and scientific writing, journalism, and even textbooks. It helps both writers and readers by taking a complex job – verifying citations – and making it fast, accurate, and (relatively) pain-free.

Citation Check uses our AI agent, trained in-house, to detect any hallucinations in your document, including AI-generated citations. Citation Check can also evaluate the accuracy of each cited claim and can even identify important uncited claims before hunting down supporting evidence.

The Report

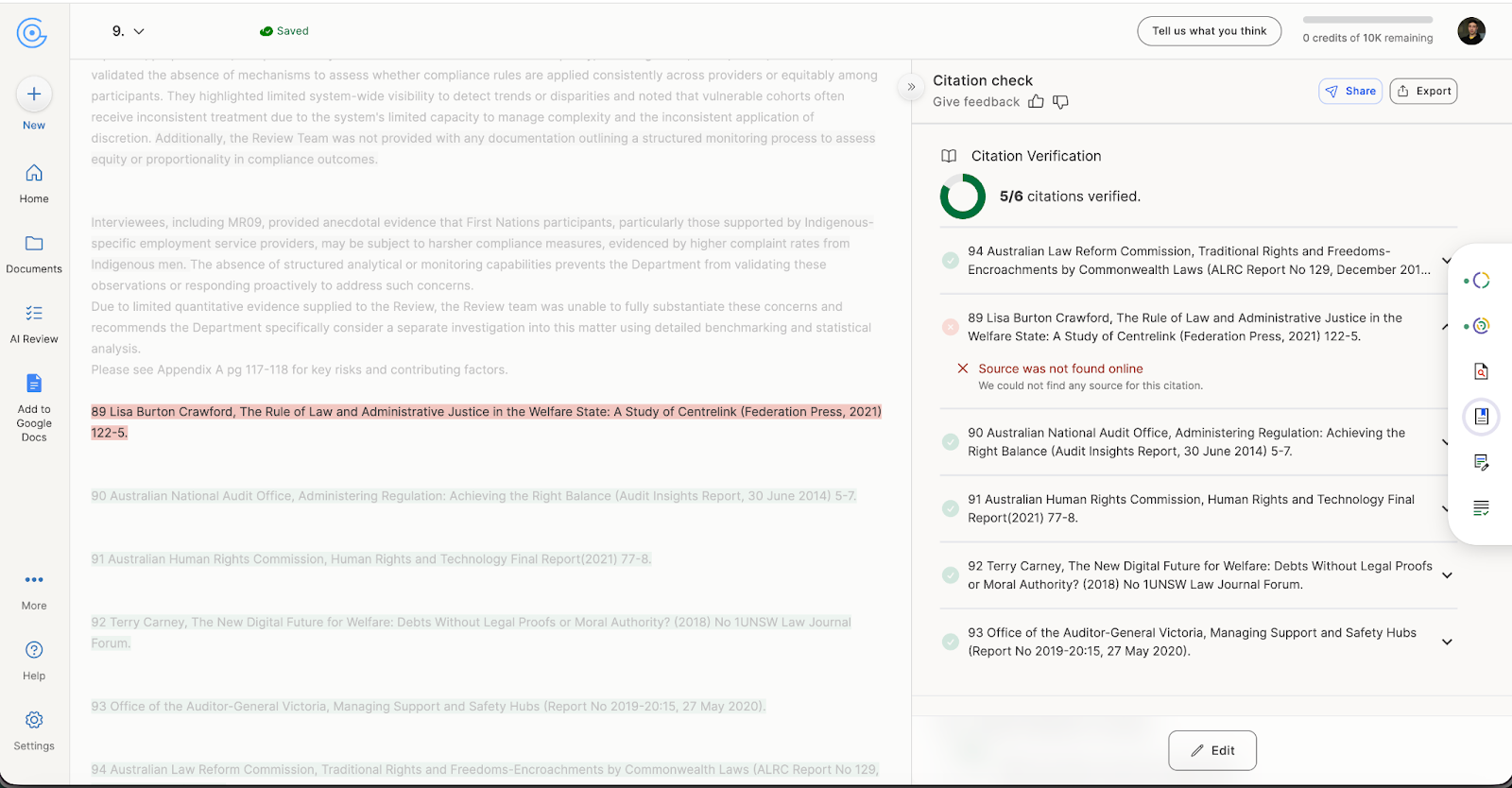

Deloitte’s original version of the report contained 141 citations. Citation Check identified more than 30 issues with these citations, including 20 probable hallucinations. For example, this scan of Chapter 9 indicates that citation 89 is fake.

After scanning the report, we compared the results to a version labelled manually by an expert on our team. Table 1 includes all the citations that both Citation Check and our expert agreed were either fake or unavailable online. Table 2 includes sources our tool coded yellow, usually because the citation was flawed but still pointed to a real source. Table 3 includes several complicated cases that Citation Check coded green, but which were flagged by our expert.

Table 1. Citation Check found 20 probable hallucinations. For context, we’ve also included the scans and the corresponding section of the report.

Table 2. Citation Check was unsure about these edge cases.

Table 3. Citation Check disagreed with our human expert on these sources. However, while these citations are garbled, all the sources (arguably) exist.

Why Professionals are using Citation Check to reduce risk and time-to-compliance

Verifying citations is a grind, requiring time-consuming searches across multiple databases and meticulous attention to detail. Our AI can take an hours-long task and give professionals the right pointers in minutes.

In the Deloitte report, for example, Australian professor Lisa Burton Crawford is cited seven times. Crawford is a highly regarded expert in public and constitutional law who published a book with Federation Press in 2017 titled The Rule of Law and the Australian Constitution. The report attributes two books to Crawford (48 and 55 in the above table). Both titles begin with the phrase “The Rule of Law” and both books are credited to Federation Press. According to Crawford, neither is real.

Citation Check also caught a speech sourced to “Justice Natalie Cujes Perry,” which is a hybrid of two real people: Australian Justice Melissa Perry and Australian academic Natalie Cujes. In fact, nearly all the hallucinations in the above tables are distorted versions of a real source, or mashups of several sources. This makes them especially hard to catch, even for experts, as today’s LLMs are trained to closely mimic human writing. One citation in particular was such a perfect edge case that our team spent nearly an hour arguing before deciding to include it in one of the tables above. Modern consulting teams are using Citation Check with a human in the loop to tackle these challenges.

But is it accurate?

Since releasing Citation Check earlier this year, we’ve relentlessly improved its accuracy, speed, and user experience. Our false negative rate is now less than one percent, meaning that for every 100 hallucinated citations in a document, Citation Check will catch at least 99 of them.
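To make the metric concrete, here is a minimal sketch of how a false negative rate is computed from a labelled sample. The function names and the 99/1 split are illustrative only, chosen to match the "99 out of 100" example above, and are not output from Citation Check.

```python
def false_negative_rate(caught: int, missed: int) -> float:
    """Share of truly hallucinated citations that the checker failed to flag."""
    total = caught + missed
    if total == 0:
        raise ValueError("need at least one hallucinated citation")
    return missed / total


def recall(caught: int, missed: int) -> float:
    """Share of truly hallucinated citations that the checker flagged."""
    return 1.0 - false_negative_rate(caught, missed)


if __name__ == "__main__":
    # Hypothetical sample: 100 hallucinated citations, 99 flagged, 1 missed.
    print(f"false negative rate: {false_negative_rate(99, 1):.2%}")
    print(f"recall: {recall(99, 1):.2%}")
```

A false negative rate below one percent is the same statement as a recall above 99 percent; it says nothing about how often real citations are flagged by mistake, which is a separate measure.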

Having spent hundreds of hours manually verifying citations over the last year, our team knows exactly how much time Citation Check could save students, teachers, journalists, analysts and other professionals.

Essentially, with the rise of AI, it’s becoming increasingly tempting to use it in day-to-day work. And that’s absolutely normal, as AI can help us to be more productive. But, like any tool invented by humans, it comes with a cost. In the case of AI, that cost is misinformation.

How to solve ‘hallucitations’

The Deloitte team’s problem wasn’t their use of an AI tool, but their lack of a way to verify every element of that tool’s output, including citations. Artificial intelligence inherits the drawbacks of the human intelligence that created it, including the tendency to be wrong. For modern LLMs, one of the major symptoms of this tendency is hallucination: the result of a model trying to be helpful at the expense of accuracy. Hallucinations are especially common in citations, making them an obvious indicator of AI use.

Prior to the development of LLMs, skeptics used to ask for references if they didn’t believe a fact or claim. Many use this strategy with LLMs as well, trying to avoid hallucinations by asking the model to provide a source – yet this is a trap that many have stumbled into.

Unlike humans, LLMs are extremely good at fabricating well-formatted references with plausible authors and titles. Because humans associate well-formatted citations with domain expertise, the skills displayed by an LLM lull us into thinking the cited claim is well-supported, especially since verifying citations is tedious.

Try GPTZero's Citation Check for yourself, or reach out to GPTZero's sales team.