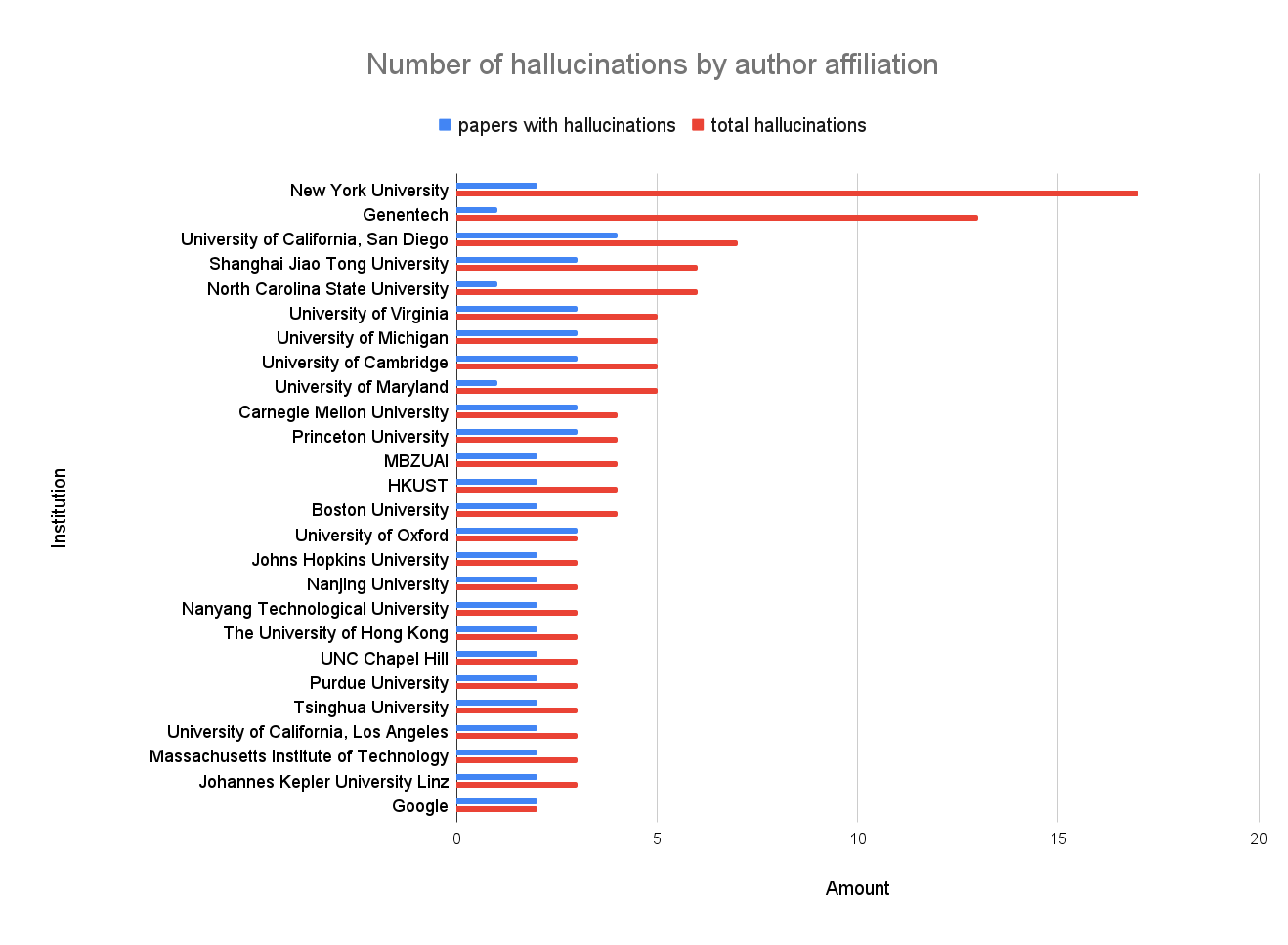

Published Paper | GPTZero Scan | Example of Verified Hallucination | Comment |

SimWorld: An Open-ended Simulator for Agents in Physical and Social Worlds | Sources

AI | John Doe and Jane Smith. Webvoyager: Building an end-to-end web agent with large multimodal models. arXiv preprint arXiv:2401.00001, 2024. | Article with a matching title exists here. Authors are obviously fabricated. arXiv ID links to a different article. |

Unmasking Puppeteers: Leveraging Biometric Leakage to Expose Impersonation in AI-Based Videoconferencing | Sources

AI* | John Smith and Jane Doe. Deep learning techniques for avatar-based interaction in virtual environments. IEEE Transactions on Neural Networks and Learning Systems, 32(12):5600-5612, 2021. doi: 10.1109/TNNLS.2021.3071234. URL https://ieeexplore.ieee.org/document/307123 | No author or title match. Doesn't exist in publication. URL and DOI are fake. |

Unmasking Puppeteers: Leveraging Biometric Leakage to Expose Impersonation in AI-Based Videoconferencing | Sources

AI* | Min-Jun Lee and Soo-Young Kim. Generative adversarial networks for hyper-realistic avatar creation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 1234-1243, 2022. doi: 10.1109/CVPR.2022.001234. URL https://ieeexplore.ieee.org/document/00123 | No author or title match. Doesn't exist in publication. URL and DOI are fake. |

SimWorld-Robotics: Synthesizing Photorealistic and Dynamic Urban Environments for Multimodal Robot Navigation and Collaboration | Sources

AI* | Firstname Lastname and Others. Drivlme: A large-scale multi-agent driving benchmark, 2023. URL or arXiv ID to be updated. | No title or author match. Potentially referring to this article, but the year is off (2024). |

SimWorld-Robotics: Synthesizing Photorealistic and Dynamic Urban Environments for Multimodal Robot Navigation and Collaboration | Sources

AI* | Firstname Lastname and Others. Robotslang: Grounded natural language for multi-robot object search, 2024. To appear. | No title or author match. Potentially referring to this article, but the year is far off (2020). |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | Nuo Lou and et al. Dsp: Diffusion-based span prediction for masked text modeling. arXiv preprint arXiv:2305.XXXX, 2023. | No title or author match and arXiv ID is incomplete. |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | A. Sahoo and et al. inatk: Iterative noise aware text denoising. arXiv preprint arXiv:2402.XXXX, 2024. | No title or author match and arXiv ID is incomplete. |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | Sheng Shi and et al. Maskgpt: Uniform denoising diffusion for language. arXiv preprint arXiv:2401.XXXX, 2024. | No title or author match and arXiv ID is incomplete. |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | Asma Issa, George Mohler, and John Johnson. Paraphrase identification using deep contextualized representations. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing (EMNLP), pp. 517-526, 2018. | No author or title match. No match in publication. |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | Yi Tay, Kelvin Fu, Kai Wu, Ivan Casanueva, Jianfeng Liu, Byron Wallace, Shuohang Wang, Bajrang Singh, and Julian McAuley. Reasoning with heterogeneous graph representations for knowledge-aware question answering. In Findings of the Association for Computational Linguistics: ACL 2021, pp. 3497-3506, 2021. | No exact author or title match, although this title is close. No match in the publication. |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | Alex Wang, Rishi Bommasani, Dan Hendrycks, Daniel Song, and Zhilin Zhang. Efficient fewshot learning with efl: A single transformer for all tasks. In arXiv preprint arXiv:2107.13586, 2021. | No title or author match. ArXiv ID leads to a different article. |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | Lei Yu, Jimmy Dumsmyr, and Kevin Knight. Deep paraphrase identification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), pp. 650-655, 2014. | No title or author match. No match in publication. |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | X. Ou and et al. Tuqdm: Token unmasking with quantized diffusion models. In ACL, 2024. | No title or author match. |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | Franz Aichberger, Lily Chen, and John Smith. Semantically diverse language generation. In International Conference on Learning Representations (ICLR), 2025. | No title or author match. Some similarity to this article. |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | Maria Glushkova, Shiori Kobayashi, and Junichi Suzuki. Uncertainty estimation in neural text regression. In Findings of the Association for Computational Linguistics: EMNLP 2021, pp. 4567-4576, 2021. | No author or title match. No match in publication. |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | Yichao Wang, Bowen Zhou, Adam Lopez, and Benjamin Snyder. Uncertainty quantification in abstractive summarization. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (ACL), pp. 1234-1245, 2022. | No author or title match. |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | Mohit Jain, Ethan Perez, and James Glass. Learning to predict confidence for language models. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP), pp. 245-256, 2021. | No author or title match. No match in publication. |

Efficient semantic uncertainty quantification in language models via diversity-steered sampling | Sources

AI | Srinivasan Kadavath, Urvashi Khandelwal, Alec Radford, and Noam Shazeer. Answer me this: Self-verifying large language models. In arXiv preprint arXiv:2205.05407, 2022. | No author or title match. ArXiv ID leads to a different article. |

Privacy Reasoning in Ambiguous Contexts | Sources

AI | Zayne Sprague, Xi Ye, Kyle Richardson, and Greg Durrett. MuSR: Testing the limits of chain-of-thought with multistep soft reasoning. In EMNLP, 2023. | Two authors are omitted and one (Kyle Richardson) is added. This paper was published at ICLR 2024. |

Memory-Augmented Potential Field Theory: A Framework for Adaptive Control in Non-Convex Domains | Sources

AI** | Mario Paolone, Trevor Gaunt, Xavier Guillaud, Marco Liserre, Sakis Meliopoulos, Antonello Monti, Thierry Van Cutsem, Vijay Vittal, and Costas Vournas. A benchmark model for power system stability controls. IEEE Transactions on Power Systems, 35(5):3627-3635, 2020. | The authors match this paper, but the title, publisher, volume, issue, and page numbers are incorrect. Year (2020) is correct. |

Memory-Augmented Potential Field Theory: A Framework for Adaptive Control in Non-Convex Domains | Sources

AI** | Mingliang Han, Bingni W Wei, Phelan Senatus, Jörg D Winkel, Mason Youngblood, I-Han Lee, and David J Mandell. Deep koopman operator: A model-free approach to nonlinear dynamical systems. Chaos: An Interdisciplinary Journal of Nonlinear Science, 30(12):123135, 2020. | No title or author match. Journal and other identifiers match this article. |

Adaptive Quantization in Generative Flow Networks for Probabilistic Sequential Prediction | Sources

AI | Francisco Ramalho, Meng Liu, Zihan Liu, and Etienne Mathieu. Towards gflownets for continuous control. arXiv preprint arXiv:2310.18664, 2023. | No author or title match. ArXiv ID matches this paper. |

Grounded Reinforcement Learning for Visual Reasoning | Sources

AI* | Arjun Gupta, Xi Victoria Lin, Chunyuan Zhang, Michel Galley, Jianfeng Gao, and Carlos Guestrin Ferrer. Robust compositional visual reasoning via language-guided neural module networks. In Advances in Neural Information Processing Systems (NeurIPS), 2021. | No title or author match. This paper has a similar title and matches publication. |

MTRec: Learning to Align with User Preferences via Mental Reward Models | Sources

AI | Diederik P. Kingma and Jimmy Ba. Deepfm: a factorization-machine based neural network for ctr prediction. In International Conference on Learning Representations, 2015. | Title matches this paper. Authors, date, and publisher match this paper. |

Redefining Experts: Interpretable Decomposition of Language Models for Toxicity Mitigation | Sources

AI* | Weijia Xu, Xing Niu, and Marine Carpuat. Controlling toxicity in neural machine translation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 4245-4256, 2020. | Authors, publisher, and date match this paper. Title and page numbers don't match. |

Redefining Experts: Interpretable Decomposition of Language Models for Toxicity Mitigation | Sources

AI* | Xiang Zhang, Xuehai Wei, Xian Zhang, and Xue Zhang. Adversarial attacks and defenses in toxicity detection: A survey. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), pages 1-8. IEEE, 2020. | No author or title match. Doesn't exist in publication. |

Self-supervised Learning of Echocardiographic Video Representations via Online Cluster Distillation | Sources

AI* | Fenglin Ding, Debesh Jha, Maria Härgestam, Pål Halvorsen, Michael A Riegler, Dag Johansen, Ronny Hänsch, and Håvard Stensland. Vits: Vision transformer for video self-supervised pretraining of surgical phase recognition. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 293-302. Springer, 2022. | No title or author match. Proceedings from this conference are split into volumes, but the citation doesn't have a volume number. |

PANTHER: Generative Pretraining Beyond Language for Sequential User Behavior Modeling | Sources

AI* | Humberto Acevedo-Viloria, Juan Martinez, and Maria Garcia. Relational graph convolutional networks for financial fraud detection. IEEE Transactions on Knowledge and Data Engineering, 33(7):1357-1370, 2021. doi: 10.1109/TKDE.2020.3007655. | No author or title match. Doesn't exist in the cited publication. |

PANTHER: Generative Pretraining Beyond Language for Sequential User Behavior Modeling | Sources

AI* | Majid Zolghadr, Mohsen Jamali, and Jiawei Zhang. Diffurecsys: Diffusion-based generative modeling for sequential recommendation. Proceedings of the ACM Web Conference (WWW), pages 2156-2165, 2024. doi: 10.1145/3545678.3557899. | No author or title match. DOI doesn't exist. |

LiteReality: Graphic-Ready 3D Scene Reconstruction from RGB-D Scans | Sources

AI | Bernd Kerbl, Thomas Müller, and Paolo Favaro. Efficient 3d gaussian splatting for real-time neural rendering. In CVPR, 2022. 2, 3 | Loosely matches this article, but only one author and part of the title actually match. |

LiteReality: Graphic-Ready 3D Scene Reconstruction from RGB-D Scans | Sources

AI | Punchana Khungurn, Edward H. Adelson, Julie Dorsey, and Holly Rushmeier. Matching real-world material appearance. TPAMI, 2015. 6 | No clear match. Two authors and the subject match this article. |

When and How Unlabeled Data Provably Improve In-Context Learning | Sources

AI | Ashish Kumar, Logan Engstrom, Andrew Ilyas, and Dimitris Tsipras. Understanding self-training for gradient-boosted trees. In Advances in Neural Information Processing Systems (NeurIPS), volume 33, pp. 1651-1662, 2020. | No title or author match. Doesn't exist in publication. |

When and How Unlabeled Data Provably Improve In-Context Learning | Sources

AI | Chuang Fan, Shipeng Liu, Seyed Motamed, Shiyu Zhong, Silvio Savarese, Juan Carlos Niebles, Anima Anandkumar, Adrien Gaidon, and Stefan Scherer. Expectation maximization pseudo labels. arXiv preprint arXiv:2305.01747, 2023. | This paper exists, but all the authors are fabricated. |

DualCnst: Enhancing Zero-Shot Out-of-Distribution Detection via Text-Image Consistency in Vision-Language Models | Sources

AI* | T. Qiao, W. Liu, Z. Xie, H. Xu, J. Lin, J. Huang, and Y. Yang, "Clip-score: A robust scoring metric for text-to-image generation," arXiv preprint arXiv:2201.07519, 2022. | No clear author or title matches. Title loosely matches this article. ArXiv ID leads here. |

Optimal Rates for Generalization of Gradient Descent for Deep ReLU Classification | Sources

AI | Yunwen Lei, Puyu Wang, Yiming Ying, and Ding-Xuan Zhou. Optimization and generalization of gradient descent for shallow relu networks with minimal width. preprint, 2024. | No title match. Authors match this paper. |

GeoDynamics: A Geometric State‑Space Neural Network for Understanding Brain Dynamics on Riemannian Manifolds | Sources

AI* | Uher, R., Goodman, R., Moutoussis, M., Brammer, M., Williams, S.C.R., Dolan, R.J.: Cognitive and neural predictors of response to cognitive behavioral therapy for depression: a review of the evidence. Journal of Affective Disorders 169, 94-104 (2014) | No exact title or author match. Loose title match with this article. Doesn't exist in the journal volume. |

Robust Label Proportions Learning | Scan

AI* | Junyeong Lee, Yiseong Kim, Seungju Park, and Hyunjik Lee. Softmatch: Addressing the quantity-quality trade-off in semi-supervised learning. In Advances in Neural Information Processing Systems (NeurIPS), volume 36, pages 18315-18327, 2023. | Title matches this paper. No match in NeurIPS volume 36. |

NFL-BA: Near-Field Light Bundle Adjustment for SLAM in Dynamic Lighting | Sources

AI | Z. Zhu, T. Yu, X. Zhang, J. Li, Y. Zhang, and Y. Fu. Neuralrgb-d: Neural representations for depth estimation and scene mapping. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2022. | No author or title match. Doesn't exist in publication. |

NFL-BA: Near-Field Light Bundle Adjustment for SLAM in Dynamic Lighting | Sources

AI | Y. Zhang, M. Oswald, and D. Cremers. Airslam: Illumination-invariant hybrid slam. In International Conference on Computer Vision (ICCV), pages 2345-2354, 2023. | No author or title match. Doesn't exist in publication. |

Geometric Imbalance in Semi-Supervised Node Classification | Sources

AI | Yihong Zhu, Junxian Li, Xianfeng Han, Shirui Pan, Liang Yao, and Chengqi Wang. Spectral contrastive graph clustering. In International Conference on Learning Representations, 2022. | No title or author match. This paper has a similar title, but there's no match in the ICLR 2022 database. |

Geometric Imbalance in Semi-Supervised Node Classification | Sources

AI | Ming Zhong, Han Liu, Weizhu Zhang, Houyu Wang, Xiang Li, Maosong Sun, and Xu Han. Hyperbolic and spherical embeddings for long-tail entities. In ACL, pages 5491-5501, 2021. | No author or title match. Doesn't exist in publication. |

NUTS: Eddy-Robust Reconstruction of Surface Ocean Nutrients via Two-Scale Modeling | Sources

AI* | Ye Gao, Robert Tardif, Jiale Cao, and Tapio Schneider. Artificial intelligence reconstructs missing climate information. Nature Geoscience, 17:158-164, 2024. doi: 10.1038/s41561-023-01297-2. | Title and publisher match this article. Issue, page numbers, and year match this article. DOI is fabricated. |

NUTS: Eddy-Robust Reconstruction of Surface Ocean Nutrients via Two-Scale Modeling | Sources

AI* | Étienne Pardoux and Alexander Yu Veretennikov. Poisson equation for multiscale diffusions. Journal of Mathematical Sciences, 111(3):3713-3719, 2002. | The authors have frequently published together on the Poisson equation, but this title doesn't match any of their publications. Doesn't exist in publication volume/issue. |

Test-Time Adaptation of Vision-Language Models for Open-Vocabulary Semantic Segmentation | Sources

AI* | Charanpal D Mummadi, Matthias Arens, and Thomas Brox. Test-time adaptation for continual semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 11828-11837, 2021. | No title or author match. Doesn't exist in publication. |

Test-Time Adaptation of Vision-Language Models for Open-Vocabulary Semantic Segmentation | Sources

AI* | Jiacheng He, Zhilu Zhang, Zhen Wang, and Yan Huang. Autoencoder based test-time adaptation for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), pages 998-1007, 2021. | No author or title match. Doesn't exist in publication. |

Global Minimizers of ℓp-Regularized Objectives Yield the Sparsest ReLU Neural Networks | Sources

AI | M. Gong, F. Yu, J. Zhang, and D. Tao. Efficient ℓp norm regularization for learning sparsity in deep neural networks. IEEE Transactions on Neural Networks and Learning Systems, 33(10):5381-5392, 2022. | No title or author match. |

SHAP Meets Tensor Networks: Provably Tractable Explanations with Parallelism | Sources

AI | Mihail Stoian, Richard Milbradt, and Christian Mendl. NP-Hardness of Optimal TensorNetwork Contraction and Polynomial-Time Algorithms for Tree Tensor Networks. Quantum, 6:e119, 2022. | The authors match this article and the title is similar. However, the year, publisher and other data don't match. This article didn't appear in the 2022 Quantum volume. |

SHAP Meets Tensor Networks: Provably Tractable Explanations with Parallelism | Sources

AI | Jianyu Xu, Wei Li, and Ming Zhao. Complexity of Optimal Tensor Network Contraction Sequences. Journal of Computational Physics, 480:112237, 2023. | No title or author match. Doesn't exist in publication. |

Learning World Models for Interactive Video Generation | Sources

AI | Patrick Esser, Robin Rombach, and Björn Ommer. Structure-aware video generation with latent diffusion models. arXiv preprint arXiv:2303.07332, 2023. | Authors match this article. ArXiv ID leads to a different article. |

Fourier Token Merging: Understanding and Capitalizing Frequency Domain for Efficient Image Generation | Sources

AI | Lele Xu, Chen Lin, Hongyu Zhao, and et al. Gaborvit: Global attention with local frequency awareness. In European Conference on Computer Vision (ECCV), 2022. | No author or title match. No match in publication. |

Fourier Token Merging: Understanding and Capitalizing Frequency Domain for Efficient Image Generation | Sources

AI | Yoonwoo Lee, Jaehyeong Kang, Namil Kim, Jinwoo Shin, and Honglak Lee. Structured fast fourier transform attention for vision transformers. In Advances in Neural Information Processing Systems (NeurIPS), 2022. | No author or title match. Doesn't exist in publication. |

Fourier Token Merging: Understanding and Capitalizing Frequency Domain for Efficient Image Generation | Sources

AI | Siyuan Gong, Alan Yu, Xiaohan Chen, Yinpeng Lin, and Larry S Davis. Vision transformer compression: Early exiting and token pruning. In Advances in Neural Information Processing Systems (NeurIPS), 2021. | No author or title match. No match in publication. |

Fourier Token Merging: Understanding and Capitalizing Frequency Domain for Efficient Image Generation | Sources

AI | Jiuxiang Shi, Zuxuan Wu, and Dahua Lin. Token-aware adaptive sampling for efficient diffusion models. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023. | No author or title match. Doesn't exist in publication. |

Fourier Token Merging: Understanding and Capitalizing Frequency Domain for Efficient Image Generation | Sources

AI | Raphael Muller, Simon Kornblith, and Geoffrey Hinton. Adavit: Adaptive tokens for efficient vision transformer. In Proceedings of the International Conference on Machine Learning (ICML), 2021. | Authors match this article. Title matches this article. No match in publication. |

Fourier Token Merging: Understanding and Capitalizing Frequency Domain for Efficient Image Generation | Sources

AI | Xin Wang, Anlin Chen, Lihui Xie, Xin Jin, Cheng Wang, and Ping Luo. Not all tokens are equal: Efficient transformer for tokenization and beyond. In Advances in Neural Information Processing Systems (NeurIPS), 2021. | No author or title match. This article title is similar. No match in publication. |

A Unified Stability Analysis of SAM vs SGD: Role of Data Coherence and Emergence of Simplicity Bias | Sources

AI | Z. Chen and N. Flammarion. When and why sam generalizes better: An optimization perspective. arXiv preprint arXiv:2206.09267, 2022. | No author or title match. ArXiv ID leads to a different paper. |

A Unified Stability Analysis of SAM vs SGD: Role of Data Coherence and Emergence of Simplicity Bias | Sources

AI | K. A. Sankararaman, S. Sankararaman, H. Pandey, S. Ganguli, and F. Bromberg. The impact of neural network overparameterization on gradient confusion and stochastic gradient descent. In 37th International Conference on Machine Learning (ICML), pages 8469-8479, 2020. | This paper is a match, but all authors except the first (K. A. Sankararaman) are fabricated. |

MaterialRefGS: Reflective Gaussian Splatting with Multi-view Consistent Material Inference | Sources

AI | Why Physically-Based Rendering. Physically-based rendering. Procedia IUTAM, 13(127137):3, 2015. | No author given and title appears to be garbled. Publisher, issue, year, and pages match this article. |

Robust Reinforcement Learning in Finance: Modeling Market Impact with Elliptic Uncertainty Sets | Sources

AI | Pierre Casgrain, Anirudh Kulkarni, and Nicholas Watters. Learning to trade with continuous action spaces: Application to market making. arXiv preprint arXiv:2303.08603, 2023. | No title or author match. ArXiv ID matches a different article. |

Robust Reinforcement Learning in Finance: Modeling Market Impact with Elliptic Uncertainty Sets | Sources

AI | Z Ning and Y K Kwok. Q-learning for option pricing and hedging with transaction costs. Applied Economics, 52(55):6033-6048, 2020. | No author or title match. No match in journal volume/issue. |

Robust Reinforcement Learning in Finance: Modeling Market Impact with Elliptic Uncertainty Sets | Sources

AI | W L Chan and R O Shelton. Can machine learning improve delta hedging? Journal of Derivatives, 9(1):39-56, 2001. | No author or title match. No match in journal volume/issue. |

Robust Reinforcement Learning in Finance: Modeling Market Impact with Elliptic Uncertainty Sets | Sources

AI | Petter N Kolm, Sebastian Krügel, and Sergiy V Zadorozhnyi. Reinforcement learning for optimal hedging. The Journal of Trading, 14(4):4-17, 2019. | No author or title match. There is no volume 14 of this journal. |

Robust Reinforcement Learning in Finance: Modeling Market Impact with Elliptic Uncertainty Sets | Sources

AI | Kyung Hyun Park, Hyeong Jin Kim, and Woo Chang Kim. Deep reinforcement learning for limit order book-based market making. Expert Systems with Applications, 169:114338, 2021. | No author or title match. Publisher ID matches this article. |

FlowMixer: A Depth-Agnostic Neural Architecture for Interpretable Spatiotemporal Forecasting | Sources

AI | Moonseop Han and Elizabeth Qian. Robust prediction of dynamical systems with structured neural networks: Long-term behavior and chaos. Physica D: Nonlinear Phenomena, 427:133006, 2021. | No author or title match. Publisher ID matches this article. |

FlowMixer: A Depth-Agnostic Neural Architecture for Interpretable Spatiotemporal Forecasting | Sources

AI | Bart De Schutter and Serge P Hoogendoorn. Modeling and control of freeway traffic flow by state space neural networks. Neural Computing and Applications, 17(2):175-185, 2008. | No title match, although De Schutter and Hoogendoorn have written or coauthored several related papers (example and example). Journal volume/issue matches an unrelated article. |

FlowMixer: A Depth-Agnostic Neural Architecture for Interpretable Spatiotemporal Forecasting | Sources

AI | Jaideep Pathak, Brian R Hunt, Georg M Goerg, and Themistoklis P Sapsis. Data-driven prediction of chaotic dynamics: Methods, challenges, and opportunities. Annual Review of Condensed Matter Physics, 14:379-401, 2023. | No author or title match. No match in journal volume. |

FlowMixer: A Depth-Agnostic Neural Architecture for Interpretable Spatiotemporal Forecasting | Sources

AI | Alejandro Güemes, Stefano Discetti, and Andrea Ianiro. Coarse-grained physics-based prediction of three-dimensional unsteady flows via neural networks. Science Advances, 7(46):eabj0751, 2021. | No title or author match. Doesn't exist in journal volume/issue. |

BNMusic: Blending Environmental Noises into Personalized Music | Sources

AI* | Jeongseung Park, Minseon Yang, Minz Won Park, and Geonseok Lee. Diffsound: Differential sound manipulation with a few-shot supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, pages 1767-1775, 2021. | No title or author match. Doesn't exist in publication. |

Towards Multiscale Graph-based Protein Learning with Geometric Secondary Structural Motifs | Sources

AI* | Wenxuan Sun, Tri Dao, Hongyu Zhuang, Zihang Dai, Albert Gu, and Christopher D Manning. Llamba: Efficient llms with mamba-based distillation. arXiv preprint arXiv:2502.14458, 2024. | ArXiv ID leads to this article with a similar title and one matching author. |

Towards Multiscale Graph-based Protein Learning with Geometric Secondary Structural Motifs | Sources

AI* | Tri Dao, Shizhe Ma, Wenxuan Sun, Albert Gu, Sam Smith, Aapo Kyrola, Christopher D Manning, and Christopher Re. An empirical study of state space models for large language modeling. arXiv preprint arXiv:2406.07887, 2024. | Two authors (Tri Dao and Albert Gu), the arXiv ID, and the year match this paper. However, the title is only a partial match. |

Fourier Clouds: Fast Bias Correction for Imbalanced Semi-Supervised Learning | Sources

AI | Junyan Zhu, Chenyang Li, Chao He, and et al. Freematch: A simple framework for long-tailed semi-supervised learning. In NeurIPS, 2021. | No author or title match. This paper's title is very close, but it was published at ICLR 2023, not NeurIPS 2021. |

NormFit: A Lightweight Solution for Few-Shot Federated Learning with Non-IID Data | Scan

AI | Yijie Zang et al. Fedclip: A federated learning framework for vision-language models. In NeurIPS, 2023. | No author or title match, although this title is close. No match in publication. |

AI-Generated Video Detection via Perceptual Straightening | Sources

AI | Jiahui Liu and et al. Tall-swin: Thumbnail layout transformer for generalised deepfake video detection. In ICCV, 2023. | No author or title match. A paper with a similar title appears in publication. |

Multi-Expert Distributionally Robust Optimization for Out-of-Distribution Generalization | Sources

AI* | Nitish Srivastava and Ruslan R Salakhutdinov. Discriminative features for fast frame-based phoneme classification. Neural networks, 47:17-23, 2013. | No title match, but authors have published together previously (example). No match in publication. |

MIP against Agent: Malicious Image Patches Hijacking Multimodal OS Agents | Sources

AI | Anh Tuan Nguyen, Shengping Li, and Chao Qin. Multimodal adversarial robustness: Attack and defense. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2021. | No author or title match. Doesn't exist in publication. |

ACT as Human: Multimodal Large Language Model Data Annotation with Critical Thinking | Sources

AI* | Jack Lau, Ankan Gayen, Philipp Tschandl, Gregory A Burns, Jiahong Yuan, Tanveer SyedaMahmood, and Mehdi Moradi. A dataset and exploration of models for understanding radiology images through dialogue. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 2575-2584, 2018. | No author match. Title matches another hallucinated citation in this paper. Doesn't exist in publication. |

OCTDiff: Bridged Diffusion Model for Portable OCT Super-Resolution and Enhancement | Sources

AI | Yikai Zhang et al. "Text-to-Image Diffusion Models with Customized Guidance". In: Proceedings of the IEEE/CVF International Conference on Computer Vision. 2023. | No author or title match. Doesn't exist in publication. |

OCTDiff: Bridged Diffusion Model for Portable OCT Super-Resolution and Enhancement | Sources

AI | Author Song and AnotherAuthor Zhang. "Consistency in Diffusion Models: Improving Noise Embeddings". In: IEEE Transactions on Pattern Analysis and Machine Intelligence (2023). URL: https://arxiv.org/abs/2304.08787. | No author or title match. This paper has a similar title. ArXiv ID leads to unrelated paper. |

Strategic Costs of Perceived Bias in Fair Selection | Sources

AI | Claudia Goldin. Occupational choices and the gender wage gap. American Economic Review, 104(5):348-353, 2014. | Author is a famous economist, but the title doesn't match any of her works. Journal and locators match this unrelated article. |

Linear Transformers Implicitly Discover Unified Numerical Algorithms | Sources

AI | Olah, C., Elhage, N., Nanda, N., Schiefer, N., Jones, A., Henighan, T., and DasSarma, N. (2022). Transformer circuits. Distill, 7(3). https://distill.pub/2022/circuits/. | Most authors match this paper, but the title, publisher, and year are different. Doesn't exist in publication. |

Linear Transformers Implicitly Discover Unified Numerical Algorithms | Sources

AI | Nanda, N. (2023). Progress in mechanistic interpretability: Reverse-engineering induction heads in GPT-2. | No title match. Author may be Neel Nanda, who wrote several similar articles in 2023. |

A Tri-Modal Multi-Agent Responsive Framework for Comprehensive 3D Object Annotation | Sources

AI* | J. Zhang and X. Li. Multi-agent systems for distributed problem solving: A framework for task decomposition and coordination. Procedia Computer Science, 55:1131-1138, 2015. | No author or title match. Doesn't exist in publication. |

A Tri-Modal Multi-Agent Responsive Framework for Comprehensive 3D Object Annotation | Sources

AI* | Erfan Aghasian, Shai Avidan, Piotr Dollar, and Justin Johnson. Hierarchical protocols for multi-agent 3d scene understanding. In CVPR, pages 7664-7673, 2021. | No author or title match. Doesn't exist in publication. |

Learning Grouped Lattice Vector Quantizers for Low-Bit LLM Compression | Sources

AI* | Itay Hubara, Matthieu Courbariaux, Daniel Soudry, Rami El-Yaniv, and Yoshua Bengio. Quantizing deep convolutional networks for efficient inference: A whitepaper. arXiv preprint arXiv:1612.01462, 2017. | Authors mostly match this paper. Title matches this paper. ArXiv ID matches a third paper. |

Learning Grouped Lattice Vector Quantizers for Low-Bit LLM Compression | Sources

AI* | Zhiqiang Wang, Chao Zhang, Bing Li, Zhen Xu, and Zhiwei Li. A survey of model compression and acceleration for deep neural networks. ACM Computing Surveys, 54(7):1-34, 2021. | No author match. Title matches this paper. Doesn't exist in publication. |

PointMapPolicy: Structured Point Cloud Processing for Multi-Modal Imitation Learning | Sources

AI* | Andrew Black et al. Zero-shot skill composition with semantic feature fusion. arXiv preprint arXiv:2310.08573, 2023. | No title match. ArXiv ID leads to unrelated paper. |

PointMapPolicy: Structured Point Cloud Processing for Multi-Modal Imitation Learning | Sources

AI* | Yufei Wu, Kiran Alwala, Vivek Ganapathi, Sudeep Sharma, Yilun Chang, Yicheng Zhang, Yilun Zhou, et al. Susie: Scaling up instruction-following policies for robot manipulation. arXiv preprint arXiv:2402.17552, 2024. | No author or title match. ArXiv ID leads to unrelated article. |

FLAME: Fast Long-context Adaptive Memory for Event-based Vision | Sources

AI | Zhipeng Zhang, Chang Liu, Shihan Wu, and Yan Zhao. EST: Event spatio-temporal transformer for object recognition with event cameras. In ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 1-5. IEEE, 2023. | No author or title match. Doesn't exist in publication. |

FLAME: Fast Long-context Adaptive Memory for Event-based Vision | Sources

AI | Daniel Gehrig, Mathias Gehrig, John Monaghan, and Davide Scaramuzza. Recurrent vision transformers for dense prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, pages 3139-3148, 2021. | No author match, but this paper has a similar title. Doesn't exist in publication. |

Diversity Is All You Need for Contrastive Learning: Spectral Bounds on Gradient Magnitudes | Sources

AI** | Qiyang Du, Ozan Sener, and Silvio Savarese. Agree to disagree: Adaptive learning with gradient disagreement. In Advances in Neural Information Processing Systems (NeurIPS), 2021. | No author or title match. Sener and Savarese have published together previously. Doesn't exist in publication. |

Diversity Is All You Need for Contrastive Learning: Spectral Bounds on Gradient Magnitudes | Sources

AI** | Longxuan Jing, Yu Tian, Yujun Pei, Yibing Shen, and Jiashi Feng. Understanding contrastive representation learning through alignment and uniformity on the hypersphere. In International Conference on Learning Representations (ICLR), 2022. | No author match. Title matches this paper. Doesn't exist in publication. |

TokenSwap: A Lightweight Method to Disrupt Memorized Sequences in LLMs | Sources

AI* | Yair Leviathan, Clemens Rosenbaum, and Slav Petrov. Fast inference from transformers via speculative decoding. In ICML, 2023. | Title, publisher, and date match this paper, but every author differs except for one shared surname (Leviathan). |

TokenSwap: A Lightweight Method to Disrupt Memorized Sequences in LLMs | Sources

AI* | Wenwen Chang, Tal Schuster, and Yann LeCun. Neural surgery for memorisation: Locating and removing verbatim recall neurons. In NeurIPS, 2024. | No author or title match. Doesn't exist in publication. |

Learning the Wrong Lessons: Syntactic-Domain Spurious Correlations in Language Models | Sources

AI | M. Garcia and A. Thompson. Applications of llms in legal document analysis. Journal of Legal Technology, 7(1):50-65, 2024. | No author or title match. Publication doesn't exist. |

Learning the Wrong Lessons: Syntactic-Domain Spurious Correlations in Language Models | Sources

AI | J. Smith and A. Patel. Leveraging large language models for financial forecasting. International Journal of Financial Technology, 9(2):101-115, 2024. | No author or title match. Publication doesn't exist. |

JADE: Joint Alignment and Deep Embedding for Multi-Slice Spatial Transcriptomics | Sources

AI* | David Jones et al. Gpsa: Gene expression and histology-based spatial alignment. Nature Methods, 2023. | No author or title match. Doesn't exist in publication. |

JADE: Joint Alignment and Deep Embedding for Multi-Slice Spatial Transcriptomics | Sources

AI* | Zhihao Chen, Hantao Zhang, Yuhan Zhang, Zhanlin Hu, Quanquan Gu, Qing Zhang, and Shuo Suo. Slat: a transformer-based method for simultaneous alignment and clustering of spatial transcriptomics data. Nature Communications, 14(1):5548, 2023. | No author or title match. Doesn't exist in publication. |

Improved Regret Bounds for Linear Bandits with Heavy-Tailed Rewards | Sources

AI | François Baccelli, Gérard H. Taché, and Etienne Altman. Flow complexity and heavytailed delays in packet networks. Performance Evaluation, 49(1-4):427-449, 2002. | No author or title match. Doesn't exist in publication. |

Improved Regret Bounds for Linear Bandits with Heavy-Tailed Rewards | Sources

AI | Saravanan Jebarajakirthy, Paurav Shukla, and Prashant Palvia. Heavy-tailed distributions in online ad response: A marketing analytics perspective. Journal of Business Research, 124:818-830, 2021. | No author or title match. Doesn't exist in publication. |

AutoSciDACT: Automated Scientific Discovery through Contrastive Embedding and Hypothesis Testing | Sources

AI | Mehdi Azabou, Micah Weber, Wenlin Ma, et al. Mineclip: Multimodal neural exploration of clip latents for automatic video annotation. arXiv preprint arXiv:2210.02870, 2022. | No author or title match. The arXiv ID leads to an unrelated article. |