Google’s New AI Detection Watermarking – Will It Work?

GPTZero’s top AI detection team weighs in on Google’s SynthID watermarking announcement — what it is, what it's not, and its implications.

This week, Google DeepMind announced it is open-sourcing a method for creating a detectable AI “watermark”: a way to alter how a generative AI model, such as a large language model (LLM), produces text, images, and videos so that a custom detector can be built to recognize its output.

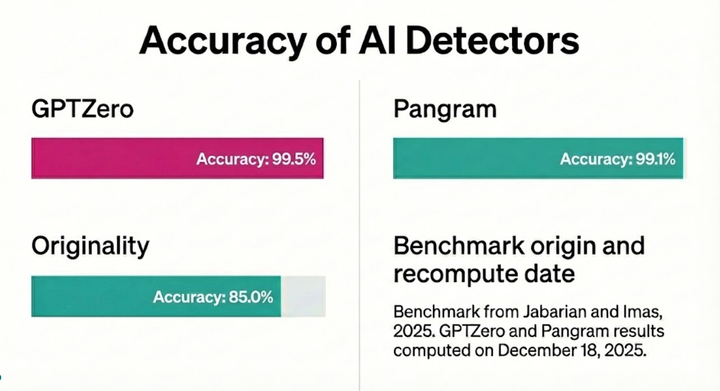

As the leading AI detection company with a dedicated machine learning team, we’ve been following this update with interest. We’ve found limitations to watermarking in the past and see the sustained need for an independent layer of detection. Our understanding is that Google’s latest SynthID announcement doesn’t currently change much in the world of genAI and detection. Here’s why.

What Google’s watermarking currently is:

- An open-source way for other LLM developers to build their own watermark and then build a separate detector to detect that watermark.

- Similar to methods already developed by OpenAI in 2022 that restrict or modify the way an AI model 'pseudorandomly' selects its words; that pattern is then shared with the detector.

- A method that works for text, images, and video, seemingly without significantly degrading the quality of the outputs, which is a major concern with most forms of watermarking by nature. We think this is the most impressive aspect.
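To make the "pseudorandom selection shared with a detector" idea concrete, here is a minimal sketch of a generic "green list" style watermark of the kind described in academic work on LLM watermarking. It is not SynthID's actual algorithm, nor OpenAI's. The toy vocabulary, the uniform sampler standing in for an LLM, and the names `is_green`, `generate`, and `detect` are all hypothetical, chosen only for illustration.

```python
import hashlib
import math
import random
from typing import List, Optional

# Toy stand-ins (assumptions): a real system would use an LLM's vocabulary
# and its next-token probabilities instead of uniform random sampling.
VOCAB = [f"w{i}" for i in range(1000)]
GREEN_FRACTION = 0.5  # share of the vocabulary marked "green" at each step


def is_green(prev_token: str, token: str, key: str) -> bool:
    """Keyed pseudorandom rule: is `token` on the green list after `prev_token`?"""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return digest[0] / 256 < GREEN_FRACTION


def generate(n_tokens: int, key: Optional[str], seed: int = 0) -> List[str]:
    """Sample tokens uniformly; when a key is given, keep only green tokens."""
    rng = random.Random(seed)
    tokens = ["<s>"]  # start marker so the first token has a predecessor
    while len(tokens) <= n_tokens:
        candidate = rng.choice(VOCAB)
        if key is None or is_green(tokens[-1], candidate, key):
            tokens.append(candidate)
    return tokens[1:]


def detect(tokens: List[str], key: str) -> float:
    """Return a z-score: how far the green count exceeds what chance predicts."""
    prev, green = "<s>", 0
    for tok in tokens:
        green += is_green(prev, tok, key)
        prev = tok
    n = len(tokens)
    expected = GREEN_FRACTION * n
    std = math.sqrt(n * GREEN_FRACTION * (1 - GREEN_FRACTION))
    return (green - expected) / std


if __name__ == "__main__":
    secret = "demo-key"  # hypothetical key shared by generator and detector
    marked = generate(200, key=secret)
    plain = generate(200, key=None)
    print("watermarked z-score:", round(detect(marked, secret), 2))
    print("unwatermarked z-score:", round(detect(plain, secret), 2))
    print("wrong-key z-score:", round(detect(marked, "other-key"), 2))
```

In this sketch every one of the 200 watermarked tokens is green, so the detector's z-score is about 14.1, while unwatermarked text or a detector holding the wrong key should score near zero. That also shows why a watermark detector only helps whoever holds the key for that particular model.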

What Google’s watermarking currently isn't:

- A commercially available AI detector for the general public.

- Able to detect output from ChatGPT, Llama, Claude, or any of the other more popular AI models.

- Able to combat common watermark bypassing methods, such as running a text through translation (e.g. English to Polish and back to English) or asking a different AI model to paraphrase it.

Why did Google release a watermark when other AI developers, like OpenAI, have not?

We hope it’s because Google does recognize the need for more transparency into the prevalence of its generative AI outputs in the world. However, although Google announced in May 2024 that it had integrated SynthID into Gemini, it hasn’t released detection to anyone outside its own team. At the time of open-sourcing and writing this post, our team was unable to find a way to detect SynthID in Gemini outside of the limited demo.

So, while this release helps a company that makes LLMs detect its own output, it isn’t much help for the millions of educators concerned about academic integrity, writers concerned with originality, and citizens concerned about deepfakes and AI-driven misinformation.

If Google were to give developers a secure and reliable way to access its watermark detection, GPTZero would be the first to integrate it into our product.

But we have also seen AI companies hesitate to let everyone detect the output of their AI tools. Companies that stand to benefit from more AI content face a conflict of interest: they don’t necessarily want to release tools that make their content easily distinguishable if their users would rather the content be passed off as "real."

What this means for AI detection

At GPTZero, we support any effort to increase transparency around the use of AI and prevent generative AI from taking over to the point of model degradation. Watermarks might be one step on the path toward solutions, but even if every model ships with a watermarking method and a commercially available way of detecting its own output, the darker demand for unwatermarked content means someone will always need to be the source of truth for multi-model generative AI content. That's what we're here for, as a champion for independent AI detection.

Interested in learning more about watermarking or AI detection? Feel free to reach out to our team.