Red Flags of AI Misuse by Job Seekers

Tips for hiring managers and recruiters to spot candidates who misrepresent their skills and accomplishments using AI.

Job hunting can be a grueling game of scale and repetition, and many candidates now reasonably use AI tools to streamline the process. But if you’re a hiring manager, you’ve likely run into a new problem in the post-ChatGPT hiring world: some candidates use AI in ways that misrepresent their skill set and fail to match the job’s or the company’s expectations.

Misusing AI in a job search can look like compensating for insecurities about English language skills with an AI-generated resume or cover letter, asking ChatGPT to fabricate work experience, or answering technical assessments with AI tools instead of one's own knowledge. This post covers common red flags of AI misuse by job seekers, as well as how to design a hiring process that stays fair and adaptable.

How big of a problem is AI misuse in job hunting?

Many recruiters, especially in tech, don't mind a candidate using AI to punch up their bio and apply more efficiently, and some even react positively to it. The risk with AI-generated resumes, however, is that candidates can hide their true skills or personality behind AI, making it much harder to identify the best candidate for a role. Such resumes may look polished on the surface but lack the personal detail that gives an accurate picture of a candidate's genuine qualifications and experience.

As a result, hiring managers may not be able to tell a truly exceptional candidate from one who leaned on AI to sound more qualified than they are until much later in the process.

While candidates think they’ve saved time, they’ve just created more work for hiring managers, who may ultimately reject them because they sound like everyone else.

“We've certainly seen an uptake in candidates submitting AI-generated CVs,” says Elspeth Coates-Gibson, Chief Commercial Officer at Appointedd. “Working in a tech business, I completely appreciate the need to scale and efficiently use AI. Utilizing such tools makes sense, but when screening candidates, I’m also keen to understand how they pitch themselves and how they market and sell their unique skill set. Often this nuance is lost through AI, and there’s so much value that can be understood by seeing how a person pitches themselves in their own words. As such, it’s great to see where candidates have used a hybrid approach.”

Other instances of AI misuse are more serious. Some jobs, like programming and technical support, can require technical assessments as part of the interview process.

Speaking on condition of anonymity, technical recruiters we've spoken to report an uptick in candidates who post excellent scores on online or take-home assessments but then perform poorly in in-person evaluations of the same skills, likely because they used ChatGPT to answer the problems rather than relying on their own knowledge.

What are the warning signs of AI misuse by candidates?

One popular applicant tracking system, Ashby, has compiled a list of red flags for what it calls "fake candidates," including:

- Applicants often claim a background in web/mobile applications, multiple programming languages, and blockchain technology.

- The applicant’s accounts (LinkedIn, GitHub, etc.) contain only very basic information.

- The number of LinkedIn connections is inconsistent with the length of their claimed career.

- Some of the information on the resume is copied from real individuals or top talent.

As the leading AI detector, we've compiled some signs to watch for when spotting candidates who may be faking their backgrounds with AI:

A polished-but-generic resume or cover letter tone

AI-generated resumes often read like a “greatest hits” of industry language and can be just as bad as, if not worse than, most human-written resumes. They may contain language like:

- “Proven track record of delivering dynamic solutions to optimize organizational efficiency.”

- “Exceptional expertise in cross-functional collaboration and stakeholder engagement.”

But what do those phrases actually mean? They may sound impressive at first, but they usually say very little, and they often don’t match the candidate’s profile or level of experience. They also don’t reflect the candidate’s individual voice or personality.

Accomplishments that the candidate can't explain well

Similarly, AI can generate specific-sounding accomplishments for a resume that the candidate can't put into context when asked about them in person. Some AI-written resumes include achievements like:

- “Increased revenue by 200% in six months,” but the candidate can't specify the project, team size, or strategy.

- “Developed and executed cutting-edge strategies for market penetration,” but the candidate can't explain how.

Unrealistic skill mastery for the industry or career level

AI-generated resumes tend to include a laundry list of technical skills, often at “expert” level, that would realistically take years to master. For example, a recent graduate whose only experience is a couple of internships might claim “expert-level proficiency in strategic leadership, business development, crisis management, and corporate finance,” high-level skills that take years to develop.

Our own machine learning recruiting team at GPTZero has said that claiming expertise in too many fields, especially within AI, is a red flag.

Other red flags

Beyond the signs above, recruiting platform Ashby points out other red flags that can indicate a candidate's claims are questionable, including missing personal social media profiles, a strict preference for remote-only jobs, and a mismatch between their accent and their claimed nationality. Candidates who keep their cameras off during interviews, or who ask for laptops to be shipped to an address different from the one they previously provided, can also raise red flags.

How to Check for AI-generated Resumes

As AI tools become more widely used in job hunting, their telltale patterns become more familiar and AI-generated resumes become easier to spot. There are also some specific steps that can streamline the process:

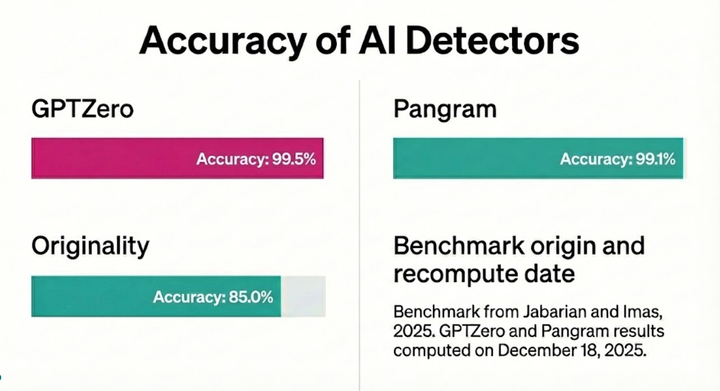

AI detection tools for resumes

Similar to AI plagiarism detectors, there are tools designed to flag machine-generated text in resumes. Platforms like GPTZero analyze text patterns to assess whether a resume might have been AI-generated. No tool is 100% foolproof, but these tools can provide a helpful starting point and save valuable time by pointing out patterns typically seen in AI-generated text (e.g., an overly formal structure).

Cross-check with AI tools

Another option is to use your own AI tools to reverse-engineer the writing process. For example, you could input a suspicious bullet point into ChatGPT and see if it generates similar phrasing. This can help you spot suspicious patterns and compare language styles.

Repeated phrases

When you skim a resume, you can usually tell quickly whether certain phrases appear over and over. AI-generated resumes often repeat keywords from the job description so that candidates can optimize them for applicant tracking systems (ATS). Phrases like ‘passionate about driving innovation’ or ‘dedicated to fostering organizational growth’ tend to pop up frequently in AI-generated resumes.
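If you screen resumes in bulk, the repetition check can be roughed out in a few lines of code. The Python sketch below is an illustrative assumption, not how GPTZero or any applicant tracking system works: it counts three-word phrases (an arbitrary default) that recur in a resume and tallies hits against a short buzzword list taken from the phrases quoted in this post. The names `repeated_phrases`, `buzzword_hits`, and `BUZZWORDS` are hypothetical, and repetition alone does not prove a resume was AI-generated.

```python
import re
from collections import Counter

# Hypothetical, illustrative buzzword list built from phrases quoted in this post;
# a real screen would need a much larger, regularly reviewed list.
BUZZWORDS = [
    "proven track record",
    "cross-functional collaboration",
    "stakeholder engagement",
    "passionate about driving innovation",
    "fostering organizational growth",
]


def repeated_phrases(text: str, n: int = 3, min_count: int = 2) -> dict[str, int]:
    """Return word n-grams that occur at least `min_count` times in `text`."""
    # Lowercase and split into word tokens, keeping hyphenated words intact.
    words = re.findall(r"[a-z0-9'-]+", text.lower())
    # Slide a window of n words across the text and count each phrase.
    ngrams = (" ".join(words[i : i + n]) for i in range(len(words) - n + 1))
    counts = Counter(ngrams)
    return {phrase: c for phrase, c in counts.items() if c >= min_count}


def buzzword_hits(text: str) -> dict[str, int]:
    """Count case-insensitive, non-overlapping occurrences of each listed buzzword."""
    lowered = text.lower()
    return {b: lowered.count(b) for b in BUZZWORDS if b in lowered}


if __name__ == "__main__":
    # Made-up sample text for demonstration only.
    resume = (
        "Passionate about driving innovation across teams. "
        "Led projects while passionate about driving innovation. "
        "Proven track record of fostering organizational growth."
    )
    print(repeated_phrases(resume))
    print(buzzword_hits(resume))
```

Treat the output as a prompt for a closer read or a follow-up interview question, not as grounds for rejection; a candidate who tailors a human-written resume to the job description will also repeat its keywords.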

Building Fairness into the Screening Process

AI is here to stay, whether we like it or not. If you’re casting a wide net to find the best candidate, your goal isn’t to outsmart AI or to ban candidates from using it outright, but to refine your evaluation process.

“From recently going through a hiring process and scouring an enormous amount of resumes, it’s been interesting to see how AI is being used by job seekers in their resumes,” says Lisa Shaver, International Senior Product Marketing Manager at Toast. “I’m seeing more tailored resumes for the job – people taking their standard resume and making sure it better fits with the company ethos and job description for the role. This feels like the right use of AI to me – it takes away a time-consuming process and provides inspiration for how to better get your resume noticed by recruiters.”

“Tweaks, edits, areas to improve – these are where AI really shines and is a helpful tool for job seekers,” she continues. “Where AI falls flat is the often business-jargony, over-embellished language (but also very generic) that brings no real texture to a job seeker's previous responsibilities or accomplishments. When using AI for resumes – as with a lot of what we’ve learned about AI so far – using it to improve or enhance is more effective than using it as a replacement for creating a resume from scratch.”

While the natural response is to dismiss AI-generated resumes as a sign of dishonesty, AI tools can actually help candidates overcome language barriers, lack of confidence, or lack of experience in resume writing.

Instead of automatically disqualifying candidates who have used AI tools, a fairer approach is to:

Focus on substance

Look for specific, verifiable achievements rather than polished language. Clear, accurate examples of success are far more valuable than a string of buzzwords. When a candidate can describe a real challenge they faced instead of leaning on corporate jargon, that is a better indicator of their experience.

Be transparent

Tell candidates whether and how you’ll assess authenticity during the hiring process. If you plan to use skills tests or AI detection tools, let candidates know in advance so they understand your process. If candidates know AI-generated resumes are treated differently during screening, they may be less inclined to have AI write their resume.

Reward transparency

Equally, give candidates the option to disclose whether they’ve used AI tools to create their resume, for example through a section in the application form where they can explain how they used them. It doesn’t need to be detailed; it’s simply a space to bring more honesty and clarity to the process.

Ask clarifying questions

In the interview process, probe a little deeper into high-level resume statements (e.g., “Tell me more about how you achieved that 200% revenue growth. What were some of the specific steps you took?”). Have the candidate walk you through the details of any achievements they’ve listed on their resume.

Final thoughts

Spotting an AI-generated resume isn’t about punishing candidates, especially as AI tools are only set to become more ubiquitous over time. Instead, a fairer approach is to intentionally design a hiring process that rewards authenticity and transparency, and to use an AI detector to quickly flag resumes that may have been entirely AI-generated.

A resume will only ever share part of the story of a candidate’s professional experience. Getting to the heart of whether a candidate is a good fit comes down to asking questions that give them the space to share details about how they got to where they are today, and where they see themselves in the future.