How Will AI Detection Change the Future of Journalism?

An overview of how AI detection is becoming an essential tool for accuracy and accountability in the media industry.

Mobile phones ushered in the era of citizen journalism. Social media platforms allowed anyone to broadcast events in real time. Technology has always shaped the way we consume information about what’s happening around the world, and AI is the next technology set to have a lasting influence on journalism.

While we’ve heard about AI tools that can generate content and analyze swathes of data, here we explore how AI detection (the technology that can identify AI-generated text, images, and even voices) is becoming an essential tool for accuracy and accountability in the media industry.

Benefits of AI Detection Technologies

AI detection tools can make news production more efficient and reliable, and can open up new product avenues for a digitally savvy audience. By automating repetitive tasks like source verification, AI detection can free up journalists to focus on deeper stories.

When AI detection is used to check the authenticity of what’s published, readers have more reason to trust that their news is verified and human-generated, which brings a new layer of accountability to the industry.

Speeding up news production

As David Caswell explains in an in-depth investigative piece, generative AI’s immediate opportunity in journalism is to make news production more efficient. Automating routine tasks can help newsrooms work faster, although Caswell makes it clear that this “efficiency-first” approach is just a starting point. It assumes newsrooms will keep working the same way in an industry that is actually evolving extremely quickly with new AI-driven media products.

Still, AI has the power to transform how we experience news, making it faster, more efficient, and more closely tailored to our personal interests. With its ability to sift through massive amounts of data, spot trends, and highlight patterns, AI can keep us updated on the stories most relevant to us.

AI-powered news aggregators and personalization algorithms can help, too, by analyzing our reading habits and suggesting articles that match our interests, saving us the time of searching for what’s useful. This means we can get to the info we actually want without any hassle.

New product development

Caswell argues that new audiences are likely to expect new types of news products, and as readers start to expect more control over their news experiences, newsrooms need to keep up.

AI makes this a lot easier as it opens up creative ways to experience the news. Imagine AI-generated audio and video content that makes stories more immersive, or virtual and augmented reality experiences that bring stories to life like never before.

To stand out, Caswell argues, publications need to lean into what AI cannot easily replicate: exclusive reporting, rigorous verification, and in-depth coverage on topics like local news and climate reporting.

Spotting fake news faster

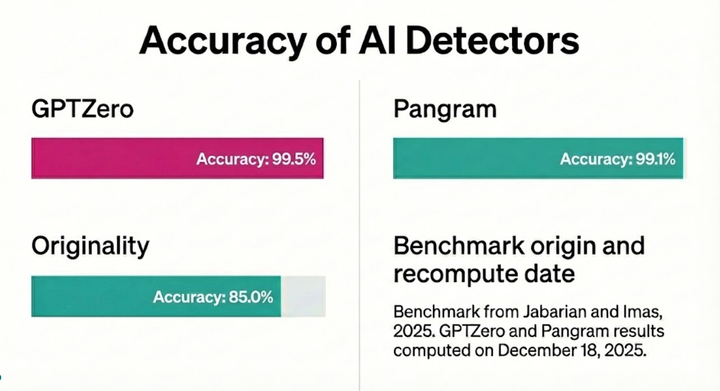

We’ve all seen headlines about fake news, doctored images, and deepfake videos. As these techniques get more sophisticated, they also get harder to detect. AI detection technology becomes essential here. It can function like a digital lie detector, helping journalists verify sources, images, and information far more quickly. Instead of spending hours confirming whether a photo is real or tracing the origin of a quote, journalists can use AI detection to flag suspicious content quickly.

This could save massive amounts of time and help newsrooms avoid unintentionally spreading misinformation. It also helps preserve the credibility of news at a time when the public’s trust in the media isn’t what it used to be and AI-generated content could complicate things even more.

If readers start to suspect that articles, interviews, or even entire news stories could be AI-generated, trust in journalism might take another hit. By using AI detection tools as safeguards against misinformation, news organizations can assure their readers that the content they’re consuming is real, researched, and human-created.

For journalists, AI detection might become as fundamental as fact-checking. Just as they verify facts, they’ll need to verify whether content has been AI-generated, giving readers confidence in the authenticity of the news. Journalists will need to show readers that the stories they are consuming are genuinely produced by humans (or clearly marked as AI-assisted).

Challenges Posed by AI Detection

With new benefits come new challenges: AI detection tools can mistakenly flag human-written articles, raising tough questions about integrity in journalism. Detection also means extra scrutiny for freelance journalists, with new expectations around originality that could affect job contracts and pay rates as the industry leans towards transparency and human authenticity.

New ethical dilemmas

Say you’re a journalist who wrote an article using an AI tool for brainstorming or fact-gathering. You’ve then built on that, added quotes, researched context, and written it in your own voice. But a detection tool flags it as AI-generated. Does that mean your work is less legitimate?

The line between AI assistance and AI authorship is blurry, and AI detection is likely to bring these questions into sharper focus. Journalists and editors will need to establish guidelines on where to draw the line. Was the AI tool a brainstorming partner or a ghostwriter? Determining this balance will be crucial to maintaining transparency with readers.

Implications for freelancers

For freelance journalists, AI detection may change how work is valued. With more freelancers and contributors than ever, newsrooms will want reassurance that submitted pieces are original. This could mean an increase in pre-publication AI checks on articles. While this could be positive for journalists who pride themselves on their originality, the move could also lead to extra scrutiny.

This, in turn, could affect pay rates. Freelancers may need to disclose whether they used AI, even if it was just to refine a sentence. This level of transparency could change contracts, rates, and job expectations, shifting the industry to value originality and ‘human touch’ over efficiency.

The Future of Skills in Journalism

While AI detection remains a relatively new technology, editors will soon have to decide whether and how to label content as ‘AI-assisted’. As part of their 21st-century toolkit, journalists will need to know how detection technology works, what its limitations are, and when to rely on it and when to dig deeper. The need for ethical training around AI use is also likely to grow.

The Potential for AI-Assisted Content to Co-Exist

What about a future where some AI content is openly allowed? This could include financial reports or game recaps, or stories that are data-heavy but low on analysis. Some newsrooms might embrace AI generation for these, with full transparency, while applying strict detection for investigative pieces and opinion columns.

AI detection tools could help balance things. Rather than eliminating AI, newsrooms might label content as either ‘AI-assisted’ or ‘human-generated.’ This could make journalism more efficient without sacrificing trust, giving readers insight into how their news was created. Readers could see at a glance whether they’re reading a computer-generated update or a deeply-researched feature, creating a more transparent, multi-layered newsroom.

A new part of the 21st-century journalist’s toolkit

As AI detection becomes standard, modern journalists will need to know how it works. It will become part of their toolkit, like conducting an interview, fact-checking, or knowing how to navigate a content management system. Training in AI detection will likely include understanding its limits (no tool is foolproof), and knowing when to trust the tech and when to dig deeper will be crucial.

Future journalists might even be trained in the ethics of AI, helping them navigate when it is okay to use AI in their work and when it is better to leave it out.

The Bottom Line

AI detection is set to become a central element in the future of journalism. While it can protect credibility and prevent the spread of misinformation, it also introduces layered questions about originality and the role of AI in news.

We can assume that the collective goal is a media landscape that’s fast and reliable, where, most importantly, readers know what’s real and what’s not. AI detection will not solve all of journalism’s challenges, but it could be a major step toward a future where we can have greater trust in what we are reading about the world around us.